1 Introduction

In 1970, Marler 〚1〛 put forward the idea that there might be parallels between human speech and birdsong, emphasising the many properties shared by the vocal development of infants and songbirds. One of the best-known features of human speech is the hemispheric specialisation of the brain. Since Broca’s work in 1861 〚2〛, it has been shown that, in humans, the left hemisphere is predominant for the production and comprehension of speech 〚3〛, while the right hemisphere is involved in the perception of prosody and emotional content 〚4〛. The lateralisation found for the production of learned song in a number of passerine birds 〚5–9〛 is reminiscent of this hemispheric asymmetry. However, lateralisation of song production in birds remains debated, since it has been observed mainly at the peripheral level and its degree can vary between species 〚10〛. Regarding perception, as the two hemispheres of the telencephalon are not directly connected, it has often been taken for granted that communication signals are processed in the hemisphere that is dominant for song production. However, this conclusion was drawn only from behavioural responses. For example, in a preliminary study (unpublished data), we observed that three out of four starlings made more head movements toward the left side while listening to their own whistles, whereas the fourth bird showed no change in the number of head movements toward the left or the right side. Moreover, there are very few physiological data on brain asymmetries in song perception, and no study has recorded responses to birdsong in both hemispheres of the same bird. To investigate lateralisation of song perception in birds, we therefore made electrophysiological recordings in both hemispheres of European starlings (Sturnus vulgaris).

Lateralisation can be considered at two different levels: the population level and the individual level. Lateralisation at the population level means that individuals show a systematic directional preference in asymmetric behaviours (in other words, that they are lateralised) and that most of them share the same preferred direction. For example, rhesus monkeys required to recognise objects by touch show a left-hand preference at the group level 〚11〛. At the individual level, by contrast, individuals may be lateralised while the direction of their preference varies from one individual to another, so that no systematic bias emerges at the population level. For example, rhesus monkeys picking up food from the ground show individual hand preferences, but no predominant tendency at the group level 〚12〛. It is therefore important to consider lateralisation of song perception in the starling at both the individual and the population level, in order to determine (i) whether there is electrophysiological evidence for lateralisation of song perception, and (ii) whether such lateralisation emerges at the individual and/or the population level, that is, whether it is consistent across birds or shows inter-subject variability.

Starlings are a good model for the study of lateralisation because their songs offer a variety of discrimination levels. Their whistles fall into two classes 〚13〛: class I comprises whistles that are sung by all male starlings and allow the recognition of species and population; class II groups together whistles specific to each individual or shared by only a few individuals. These two classes of whistles, as well as artificial non-specific sounds, were used in the present study to test for hemispheric dominance in song perception in adult starlings. Neuronal responses were recorded from the field L complex, the main auditory area of the songbird brain. The field L complex is tonotopically organised, and neurons in this area have been found to be highly selective for some complex characteristics of the whistles 〚14–16〛.

2 Materials and methods

2.1 Experimental animals

Six adult male starlings caught in the wild were used. A stainless steel well was implanted stereotaxically on the skull under halothane anaesthesia. The implant was positioned precisely relative to the bifurcation of the sagittal sinus. Birds were given a 2-day rest after implantation. During this time, and between experimental sessions, they were kept in individual cages. During the experiments, the well was used to fix the head and as a reference electrode. The bird was awake and wrapped in a wing cuff to prevent large body movements.

2.2 Acoustic stimulation

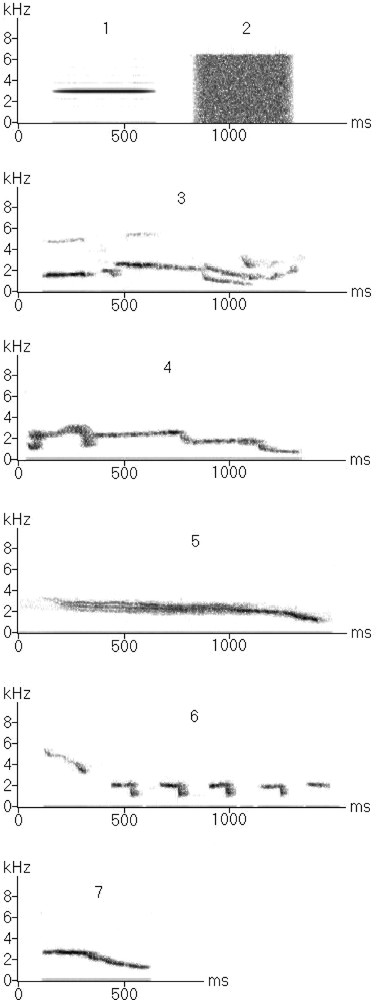

We used species-specific and artificial non-specific sounds as stimuli (Fig. 1). The species-specific stimuli (n = 19) were class I and class II whistles of the starling tested, of familiar starlings and of an unfamiliar starling. The non-specific stimuli (n = 2) were a pure tone (3 kHz) and white noise. These artificial stimuli were chosen because, as a preliminary approach to lateralisation, we wanted a restricted number of clearly different sounds. As starlings are able to mimic natural sounds, we intend to carry out a more detailed study later in which species-specific sounds will be compared with other natural sounds.

Examples of sonograms of the stimuli used. 1–2: Non-specific stimuli; 1: pure tone, 2: white noise. 3–7: species-specific whistles; 3, 4: individual whistles (class II) of an unfamiliar starling; 5–7: class I whistles, 5: simple theme, 6: rhythmic theme, 7: inflection theme.

The whole sequence of 21 stimuli lasted 27 s and was repeated 10 times at each recording site. The minimum interval between stimuli was 100 ms. Auditory stimuli were delivered in an anechoic, soundproof chamber through a loudspeaker located 60 cm in front of the bird’s head. The maximum sound level at the bird’s ear was 85 dB SPL.

2.3 Multi-unit recordings and data collection

The recording electrodes (FHC n°26-05-3) were glass-insulated tungsten wires. Electrode impedance was 2–5 MΩ.

Multi-unit recordings were made alternately in two sagittal planes in each hemisphere, at 800 and 1000 μm from the medial plane. Each recording plane consisted of three penetrations located 2600, 2800 and 3000 μm rostral to the bifurcation of the sagittal sinus. These coordinates ensured that all recording sites lay within the functional area NA-L of the field L complex 〚17〛. Recordings were made dorso-ventrally every 200 μm along each electrode penetration (15 to 20 recording sites per penetration); thus, in each bird, about 100 sites were recorded in the field L of each hemisphere, which required about two weeks of daily recording per bird. All data were collected during the breeding season.
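The sampling grid described above can be enumerated as a quick check of the per-hemisphere site count. This is a minimal Python sketch, not the authors' software: the function name `recording_sites` and the starting depth are hypothetical, and only the two planes, the three penetrations and the 200 μm spacing come from the text.

```python
from itertools import product

# Values taken from the Methods text.
LATERAL_UM = (800, 1000)          # sagittal planes, distance from the medial plane
ROSTRAL_UM = (2600, 2800, 3000)   # penetrations, rostral to the sagittal-sinus bifurcation
STEP_UM = 200                     # dorso-ventral spacing between recording sites


def recording_sites(sites_per_penetration: int, start_depth_um: int = 0):
    """Enumerate (lateral, rostral, depth) triplets for one hemisphere.

    start_depth_um is a hypothetical parameter: the text does not give the
    depth of the first recording site.
    """
    depths = [start_depth_um + i * STEP_UM for i in range(sites_per_penetration)]
    return list(product(LATERAL_UM, ROSTRAL_UM, depths))


# 15 to 20 sites per penetration gives the range of sites per hemisphere.
print(len(recording_sites(15)), len(recording_sites(20)))  # 90 120
```

With 2 planes × 3 penetrations × 15 to 20 sites, each hemisphere yields 90 to 120 sites, consistent with the "about 100 sites" reported.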

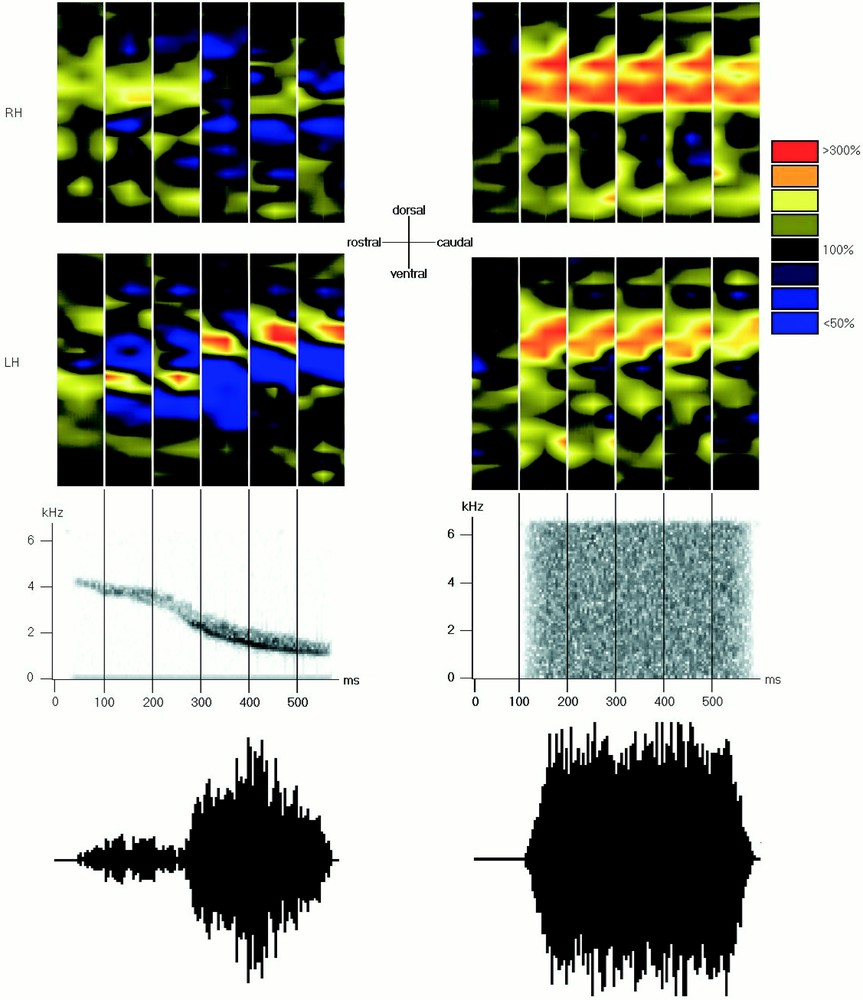

2.4 Response maps

Neuronal activity during stimulus presentation was recorded and digitised at a sampling rate of 22 kHz. For each recording site and each stimulus, peristimulus time histograms (cumulated over the 10 stimulus repetitions) were calculated with a temporal resolution of 2 ms. Spontaneous activity was measured over the 100 ms preceding the onset of each auditory stimulus. Response strength was then defined as the ratio between evoked and spontaneous activity, expressed as a percentage of spontaneous activity. The resulting values were plotted as response maps showing the spatial distribution of activity in each recording plane over time (see Fig. 2 for explanations).
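A minimal sketch of the PSTH and response-strength computation described above, assuming spike times (in seconds, relative to stimulus onset) are already available for each repetition. The function names are hypothetical, and converting both windows to mean firing rates before taking the ratio is our assumption, since the evoked and baseline windows differ in length; the 2 ms bin and the 100 ms baseline come from the text.

```python
from typing import Sequence

BIN_S = 0.002        # 2 ms temporal resolution (from the Methods)
BASELINE_S = 0.100   # spontaneous-activity window before stimulus onset


def psth(trials: Sequence[Sequence[float]], start_s: float, stop_s: float,
         bin_s: float = BIN_S) -> list[int]:
    """Spike counts per bin, cumulated over all repetitions.

    Spike times are assumed to be in seconds relative to stimulus onset.
    """
    n_bins = round((stop_s - start_s) / bin_s)
    counts = [0] * n_bins
    for spikes in trials:
        for t in spikes:
            if start_s <= t < stop_s:
                # Clamp to the last bin to absorb floating-point rounding.
                idx = min(int((t - start_s) / bin_s), n_bins - 1)
                counts[idx] += 1
    return counts


def response_strength(trials: Sequence[Sequence[float]], stim_duration_s: float) -> float:
    """Evoked activity as a percentage of spontaneous activity (100% = spontaneous).

    Both activities are converted to mean firing rates (an assumption) so
    that windows of different durations can be compared.
    """
    n = len(trials)
    spont_rate = sum(psth(trials, -BASELINE_S, 0.0)) / (n * BASELINE_S)
    evoked_rate = sum(psth(trials, 0.0, stim_duration_s)) / (n * stim_duration_s)
    return 100.0 * evoked_rate / spont_rate
```

For example, ten repetitions that each contain one spike in the baseline and two spikes during a 100 ms stimulus give a response strength of 200%, i.e. twice the spontaneous rate; values below 100% would correspond to inhibition on the maps.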

Example of one activity map. This map represents the mean activity in one recording plane (consisting of three penetrations) pooled over 100 ms. The rostro-caudal position of the penetration (in μm) is on the x axis and the depth of the recording site (in μm) on the y axis. Each intersection corresponds to one recording site. Activation and inhibition are represented with different colours (presented on the right), from blue for the strongest inhibition to red for the strongest activation (100% = spontaneous activity, represented in black).

2.5 Statistical analysis

All values obtained across all recording sites of one hemisphere were pooled for the statistical comparisons. Chi-square tests were used to compare the total number of spikes recorded in each hemisphere over (1) the whole duration of species-specific stimulus presentation versus the same duration without any stimulus, (2) the whole duration of artificial non-specific stimulus presentation versus the same duration without any stimulus, and (3) the whole duration of species-specific stimulus presentation versus the whole duration of artificial non-specific stimulus presentation.
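Each of these comparisons can be read as a 2 × 2 contingency table (hemisphere × condition), which matches the df = 1 reported in the Results. The sketch below is a plain Pearson chi-square test of independence in Python; the table layout, the absence of a continuity correction and the function name `chi2_2x2` are assumptions, not a description of the software actually used.

```python
import math


def chi2_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table (df = 1).

    Assumed layout: rows = hemispheres (left, right); columns = conditions
    (e.g. stimulus vs. no stimulus), each cell a total spike count.
    No continuity correction is applied. Returns (chi2, p).
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, observed_row in enumerate(table):
        for j, observed in enumerate(observed_row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # Survival function of the chi-square distribution with 1 df:
    # P(X > x) = erfc(sqrt(x / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p
```

With hypothetical counts of 30 and 10 spikes in the left hemisphere and 10 and 30 in the right, `chi2_2x2([[30, 10], [10, 30]])` gives χ² = 20.0 (p < 0.001); identical hemispheric proportions in both conditions give χ² = 0 and p = 1.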

3 Results

The activity maps of the six birds showed that the pattern of neuronal activity evoked in the two hemispheres differed markedly between species-specific and artificial non-specific stimuli (Fig. 3). Analysis of the maps for each stimulus showed that neuronal activity during the presentation of species-specific stimuli was systematically stronger in one hemisphere than in the other (Fig. 3). This was tested for each bird by comparing the neuronal activity during species-specific stimulus presentation with the spontaneous activity (see § Materials and methods). In each bird, the neuronal activity evoked by species-specific stimuli was significantly stronger in one hemisphere than in the other (χ2: df = 1, p = 0.01 for each bird). This effect held for the summed activation in response to all species-specific sounds, as well as for the activation evoked by most of the species-specific sounds taken separately (Wilcoxon test: n = 19, p < 0.05 for each bird). Interestingly, this hemispheric asymmetry showed inter-subject variability: four birds exhibited stronger activation in the right hemisphere (χ2: df = 1, p = 0.01 for each bird), while the other two showed stronger activation in the left hemisphere (χ2: df = 1, p = 0.01 for each bird) (Fig. 4). By contrast, the difference in neuronal activity between the two hemispheres was less clear during presentation of artificial non-specific stimuli (Fig. 3). To quantify this, we compared the activity during artificial non-specific stimulus presentation with the spontaneous activity as well as with the activity during species-specific stimulus presentation (see § Materials and methods).
Although four birds showed a significant lateralisation for artificial non-specific stimuli in the same direction as for species-specific sounds (χ2: df = 1, p = 0.01 for each bird), this lateralisation was significantly weaker than that observed for species-specific sounds (χ2: df = 1, p = 0.01 for each bird) (Fig. 4). In the other two birds, the pure tone and the white noise even evoked a stronger response in the hemisphere opposite to the one predominantly activated by species-specific stimuli (χ2: df = 1, p = 0.01 for each bird) (Fig. 4). Note that one of these two birds was ‘right-lateralised’ for the perception of species-specific sounds, while the other was ‘left-lateralised’. Thus, in each bird, the pattern of activation of the two hemispheres differed significantly between species-specific and artificial non-specific stimulus presentation (χ2: df = 1, p = 0.01 for each bird) (Fig. 4). Species-specific stimuli therefore evoked lateralised activity, whereas this lateralisation was reduced or even reversed for the artificial non-specific control stimuli. Accordingly, across the six birds, the hemisphere predominantly activated by species-specific stimuli was significantly more activated during species-specific sounds than during artificial non-specific ones (Wilcoxon: n = 6, p < 0.05).
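The across-bird comparison (Wilcoxon, n = 6) pairs, for each bird, the activation of its dominant hemisphere during species-specific and during non-specific stimuli. A minimal exact Wilcoxon signed-rank test is sketched below; it enumerates every assignment of signs to the ranks, which is feasible for small samples. The text does not state whether an exact or an approximate test was used, so the exact version is an assumption, and the function name `wilcoxon_exact` is hypothetical.

```python
from itertools import product


def wilcoxon_exact(x, y):
    """Exact two-sided Wilcoxon signed-rank test for small paired samples.

    Zero differences are discarded; tied absolute differences receive
    average ranks. Returns (W+, p).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    # Rank the absolute differences (1-based), averaging ranks over ties.
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    # Under the null hypothesis every sign assignment is equally likely.
    null_dist = [
        sum(r for r, positive in zip(ranks, signs) if positive)
        for signs in product((False, True), repeat=n)
    ]
    total = len(null_dist)
    lower = sum(1 for w in null_dist if w <= w_plus + 1e-9) / total
    upper = sum(1 for w in null_dist if w >= w_plus - 1e-9) / total
    return w_plus, min(1.0, 2 * min(lower, upper))
```

With n = 6 and no ties, the smallest attainable two-sided exact p-value is 2/64 ≈ 0.031, reached only when all six differences share the same sign; for instance, six hypothetical birds whose dominant hemisphere is always more active during species-specific sounds give W+ = 21 and p = 0.03125.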

Example for one bird (named bnb): activity maps obtained at 800 μm from the medial plane during a species-specific whistle (left panel) and white noise (right panel). This bird exhibited stronger activation in the left hemisphere during species-specific whistles and stronger activation in the opposite hemisphere during non-specific sounds. Note the tonotopy that appears on the map of the left panel. RH: right hemisphere; LH: left hemisphere. The series of maps show the time course of the neuronal activity during the stimuli with a time bin of 100 ms. Sonograms and oscillograms of the stimuli are presented beneath the maps with the same time scale.

Mean response strength (+) in the left (white bars) and right (black bars) hemispheres of the six birds (named bnb, bnrs, bno, bnvi, bnr, and bnbf) during species-specific and non-specific stimuli. The response strength is defined as the ratio between evoked and spontaneous activity over the whole duration of the stimuli, given in percentage of spontaneous activity.

4 Discussion

This study provides evidence for hemispheric asymmetry in the perception of auditory signals and shows that, at least at the individual level, the two hemispheres of the starling’s brain perceive and process species-specific and artificial non-specific sounds differently. In four birds, the right hemisphere appeared to process conspecific signals preferentially, whereas in the remaining two birds the left hemisphere did so. In contrast, in all these birds, artificial non-specific stimuli tended to be processed either more equally by both hemispheres or preferentially by the opposite hemisphere. Because the response pattern differed between the two types of stimuli, the difference in activation between the two hemispheres cannot simply be due to a shift in the recording planes or to different auditory thresholds. Furthermore, as the multi-unit recordings in the left and right hemispheres were made alternately, this dominance cannot be explained by the recording order. Note that the differences observed concern mainly neuronal response strength; we observed only minor differences in neuronal selectivity and in the responses to different types of species-specific songs, which will be described in another paper (in preparation). Nevertheless, the behavioural reactions to the bird’s own song (cf. Introduction) parallel the observations made in the field L of these birds: the birds that made more head movements towards the left side while listening to their own whistles exhibited a right-hemisphere dominance for species-specific sounds, whereas the bird that showed no change in the number of head movements towards the left or the right side exhibited a left-hemisphere dominance.

Our results are consistent with previous studies on the field L complex. We observed a tonotopic gradient from low to high frequencies running from dorso-caudal to rostro-ventral (Fig. 3), as also reported by Capsius and Leppelsack for the subcentre NA-L of the field L complex 〚17〛, also called L2. However, our results also reveal functional differences between the two hemispheres, with inter-subject variability. Given that the two hemispheres of the telencephalon are not directly connected, and that field L projects to nuclei such as the NCM and the HVC, functional differences would be expected in these nuclei as well, which argues for recording systematically in both hemispheres. Although some authors did not find any anatomical difference in the HVC between the two hemispheres of the starling’s brain 〚18〛, a functional difference could still exist. Finally, whether the lateralisation emerges at the level of field L or already exists in the nucleus ovoidalis, the main auditory input to field L, remains an open question.

The specialisation of one hemisphere in the processing of communicative signals is reminiscent of what has been found with a behavioural approach in rhesus monkeys 〚19〛 and mice 〚20〛. In the starling, our results suggest that, depending on the individual, either the left or the right hemisphere is preferentially involved in the perception of species-specific sounds relative to artificial non-specific sounds. However, because starlings can mimic a variety of natural sounds, we only tested the difference between the processing of natural species-specific sounds and that of artificial sounds. Further studies are needed to determine whether the hemispheric specialisation we observed concerns the processing of species-specific sounds specifically or of natural sounds in general. Such a specialisation could improve the processing of natural sounds heard every day by increasing computational speed and avoiding potentially conflicting computation by the other side of the brain 〚21〛. There are also similarities with the visual system of other birds: in the chick, the right hemisphere is especially concerned with global attention and the left with selecting cues that allow stimuli to be assigned to categories 〚22〛.

Although we do not know which individual factors determine hemispheric dominance, nor the precise nature of this phenomenon, there seems to be a real functional asymmetry in the brain of the European starling. We did not find a population bias, either because none exists or because too few birds were tested. However, individual variation is regularly found in studies of lateralisation. The best-known example is human lateralisation: 96% of right-handed people process speech in the left hemisphere, as do 70% of left-handed people, whereas among left-handers 15% process speech in the right hemisphere and 15% in both hemispheres 〚23〛. The lateralisation of song perception described in this paper thus adds to the many parallels between the avian and human systems that have become paradigmatic of vocal communication.

Acknowledgements

We thank Gregory F. Ball for his helpful comments on the manuscript. This work was supported by ACI Cognitique No. COG169B.

Abridged version

In 1970, Marler put forward the idea of a parallel between human language and birdsong, emphasising the many analogies between the vocal development of children and that of songbirds. One of the best-known characteristics of human language is the hemispheric specialisation of the brain. In birds, the lateralisation of learned song production observed in many passerines is reminiscent of this hemispheric dominance. However, it has been observed mainly at the peripheral level, and the degree of lateralisation can vary from one species to another. From these observations, it has often been concluded that communication signals are processed in the hemisphere that plays a dominant role in song production. Nevertheless, this conclusion is based solely on behavioural responses, and very few electrophysiological data are available on the perceptual aspect of lateralisation. The aim of this study is therefore to determine whether song perception in starlings (Sturnus vulgaris) is represented unequally in the two hemispheres of the brain.

As lateralisation can be considered at both the individual and the population level, we conducted our study at both levels.

Starlings appear to be a good model for the study of lateralisation, because their songs offer a variety of discrimination levels. Whistles corresponding to these discrimination levels, as well as artificial non-specific sounds, were therefore used to determine whether certain signals were processed by one hemisphere rather than the other. More precisely, to test for hemispheric dominance in song perception, we recorded neuronal responses to these sounds in the field L of adult starlings. Field L was chosen because it is the main auditory area of the songbird brain. Moreover, it is tonotopically organised and its neurons are selective for complex characteristics of the whistles.

Electrophysiological recordings were made in six adult male starlings caught in the wild and then kept in individual cages. These recordings were made sequentially in two sagittal planes in each hemisphere. Species-specific and non-specific auditory stimuli were used. The species-specific stimuli were whistles of the starling tested, of familiar starlings and of an unfamiliar starling. The non-specific stimuli were a pure tone (3 kHz) and white noise. The birds were awake during the recordings.

The neuronal activity evoked by the stimuli was recorded and digitised at a sampling rate of 22 kHz. From these data, PSTHs (peristimulus time histograms) were calculated with a resolution of 2 ms for every recording site and every stimulus. Spontaneous activity was determined during the 100 ms preceding each auditory stimulus, and response strength was then defined as the ratio between evoked and spontaneous activity, expressed as a percentage of spontaneous activity. The resulting values were finally plotted on activity maps showing the spatial distribution of activity in each recording plane over time.

Inspection of the activity maps of the six birds shows that the neuronal activity evoked by species-specific and non-specific stimuli differs markedly between the two hemispheres. Analysis of the maps for each stimulus shows that neuronal activity during presentation of species-specific sounds always appears lateralised and reveals inter-individual variability. Indeed, four birds show significantly stronger activation in the right hemisphere and the other two in the left hemisphere. In contrast, this difference in activity appears less clearly during playback of artificial non-specific sounds. In the latter case, the difference in activation between the two hemispheres is significantly reduced in four birds, whereas in the other two the artificial non-specific sounds even evoke significantly stronger activation in the hemisphere that was not the more strongly activated by species-specific sounds. We therefore observe that species-specific stimuli evoke lateralised activity, whereas this lateralisation is reduced, or even reversed, for artificial non-specific stimuli. Indeed, when we consider the data obtained for the six birds, the hemisphere that predominates during species-specific sounds is significantly more activated during species-specific sounds than during artificial non-specific sounds.

This study therefore shows that, at least at the individual level, the two hemispheres of the starling brain perceive and process species-specific and non-specific signals differently. In four birds, the right hemisphere appears to process species-specific signals preferentially, and in the other two, the left hemisphere. In contrast, in all birds, artificial non-specific stimuli tend to be processed more equally by the two hemispheres or by the opposite hemisphere.

Although we know neither the individual factors that determine hemispheric dominance nor the precise nature of this phenomenon, a real functional asymmetry seems to exist. The lateralisation of song perception described here adds to the many parallels between birds and humans that have become paradigmatic of vocal communication.