1. Introduction

As out-of-equilibrium phases, glasses exhibit a complex disordered atomistic structure that can accommodate virtually the entire periodic table [Mauro 2018; Musgraves et al. 2019; Zanotto and Coutinho 2004]. This structural complexity has historically limited our ability to unveil structure–property relationships in glasses [Bapst et al. 2020; Tanaka et al. 2019, 2010], but, in turn, offers a vast design space to discover new glasses with unusual properties [Liu et al. 2019d; Onbaşlı et al. 2018; Ravinder et al. 2020]. Although different experimental protocols have been developed to investigate the linkages between glass composition, structure, and properties [Almeida and Santos 2015; Kamitsos 2015; Salmon and Zeidler 2015; Youngman 2018], they yield a series of experimentally accessible structural fingerprints, e.g., the pair distribution function (PDF) obtained from diffraction experiments or coordination numbers measured by nuclear magnetic resonance (NMR) [Fischer et al. 2005; Kroeker 2015; Wright 1988; Youngman 2018], rather than offering direct access to the three-dimensional atomic structure itself [Affatigato 2015; Greaves and Sen 2007; Huang et al. 2013; Yang et al. 2021; Zhou et al. 2021]. In that regard, atomistic simulations have become a routine tool to access the atomistic structure of glasses [Bauchy 2019; Du 2019; Pedone 2009], which is otherwise invisible to conventional experiments [Pandey et al. 2016b, a], and to decipher the physical nature of the linkages between glass structure and properties [Binder and Kob 2011; Massobrio 2015; Onbaşlı and Mauro 2020]. Atomistic simulations of glasses can be broadly divided into two families, namely, knowledge-based and data-based simulations.

1.1. Physical-knowledge-based simulations

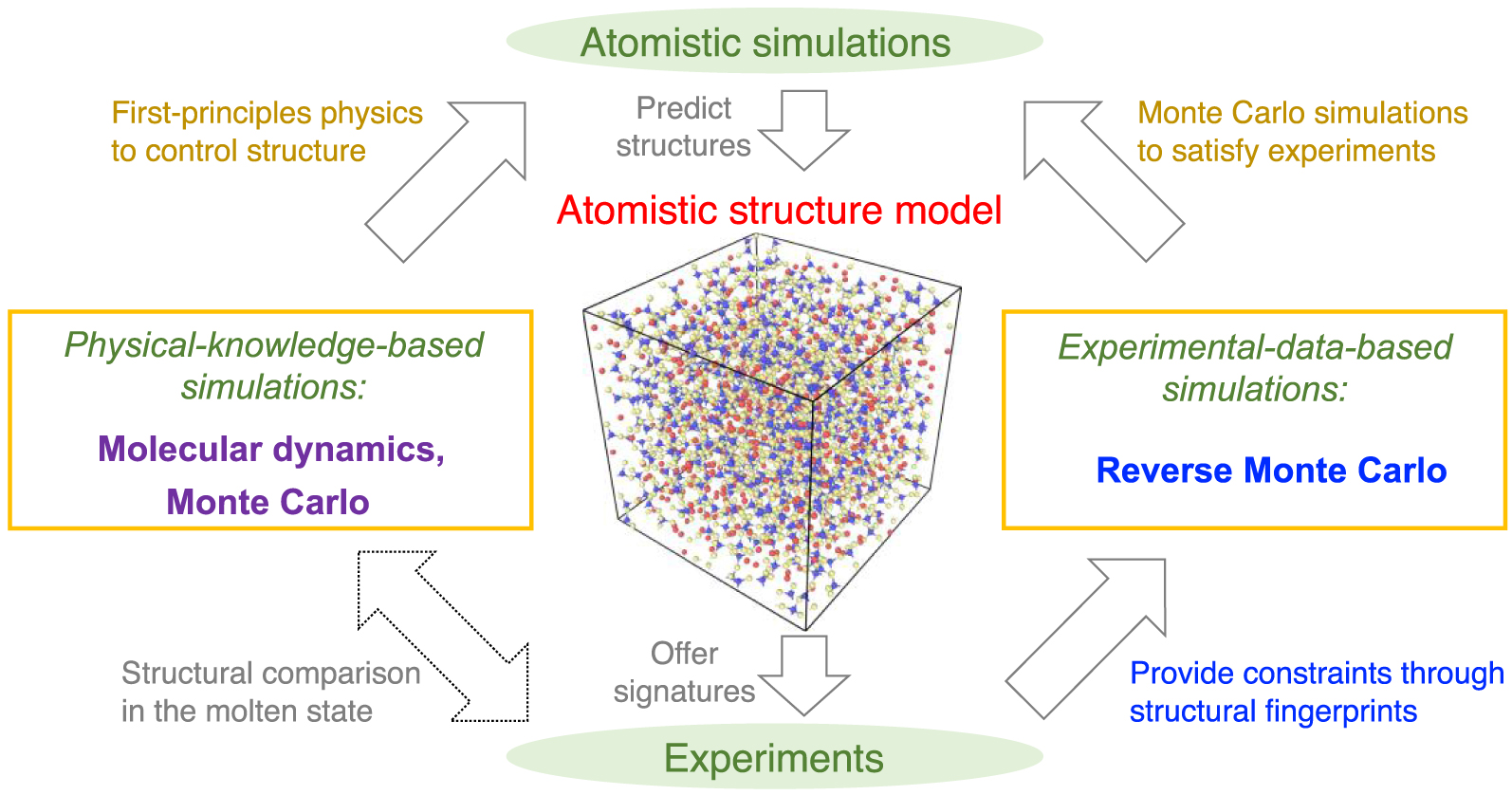

Figure 1. Schematic illustrating the atomistic simulation of glasses through (i) physical-knowledge-based simulations, e.g., molecular dynamics (MD) simulations [Soules 1990], and (ii) experimental-data-based simulations, e.g., reverse Monte Carlo (RMC) simulations [McGreevy 2001]. Simulations offer direct access to the atomistic structure of glasses that can be validated by experiments, while, in turn, experiments can offer some fingerprints of the atomistic structure that can be used as simulation constraints. Note that, despite the pronounced difference of timescale between experiments and MD simulations, a direct comparison between MD and experimental data is possible for melts, since the structure and properties of equilibrium liquids and metastable-equilibrium supercooled liquids do not depend on their thermal history [Le Losq et al. 2017].

As a primary goal, atomistic simulations aim to reveal the atomistic structure of glasses [Mauro et al. 2016; Takada 2021]. This can be achieved by explicitly simulating the time-dependent formation process of glasses, e.g., melt-quenching [Debenedetti and Stillinger 2001], sol–gel transition [Du et al. 2018], vapor deposition [Wang et al. 2020], irradiation [Krishnan et al. 2017a, b], or annealing [Grigoriev et al. 2016]. Such simulations rely on our knowledge of the physics governing the interaction between atoms, i.e., the interatomic forcefields (see Figure 1) [Massobrio 2015; Mauro et al. 2016; Onbaşlı and Mauro 2020]. In detail, starting from the sole knowledge of first-principles electron interactions [Hohenberg and Kohn 1964; Kohn and Sham 1965], one can compute the interatomic interactions acting in a glass system [Boero et al. 2015; Hafner 2008]. In turn, this interatomic forcefield drives the motion of the atoms as per Newton’s law of motion, which is the basic principle behind molecular dynamics (MD) simulations [Alder and Wainwright 1959; Takada 2021]. In other words, MD simulations predict the spontaneous motion of the atoms in glasses (or liquids) [Durandurdu and Drabold 2002; Micoulaut et al. 2013]. However, since MD simulations are generally limited to short timescales (typically up to a few nanoseconds) [Lane 2015], they are intrinsically unable to match experimental timescales (up to days) [Li et al. 2017]. In particular, as the most common method to prepare a glass [Mauro and Zanotto 2014], the melt-quenching process consists in forming a melt that is then cooled into a glass. However, the cooling rates that are accessible to MD simulations (10¹⁴–10⁹ K/s) are orders of magnitude larger than those experienced in conventional experiments (10²–10⁰ K/s) [Li et al. 2017; Vollmayr et al. 1996a, b]. Note that, although the short timescale accessible to MD simulations challenges the comparison between glasses prepared by melt-quenching experiments and MD simulations, direct comparisons are possible when the simulated system is at equilibrium (liquid) or metastable equilibrium (supercooled liquid), since the structure and properties of such systems do not depend on their thermal history (see Figure 1) [Le Losq et al. 2017].

To overcome the short timescale accessible to MD simulations [Li et al. 2017], various simulation techniques have been proposed to accelerate the exploration of a glass’s potential energy landscape (PEL), which represents the topography of the potential energy of a system as a function of its atom positions [Debenedetti and Stillinger 2001; Lacks 2001; Lacks and Osborne 2004]. Although MD simulates the real, spontaneous evolution of the system within its PEL, its limited timescale typically prevents large energy barriers from being overcome [Yu et al. 2017b, 2015]. In contrast, accelerated simulation techniques tend to push systems across energy barriers to accelerate their dynamics, but such accelerated dynamics may not always match the spontaneous dynamics of the atoms [Bauchy et al. 2017; Fullerton and Berthier 2020; Liu et al. 2019a]. As one of the simplest sampling techniques, energy-based Monte Carlo (MC) simulations aim to explore the PEL of a glass by performing a series of random “moves” (e.g., by displacing a randomly selected atom) [Allen and Tildesley 2017; Utz et al. 2000] so as to discover lower-energy states in the PEL [Arceri et al. 2020; Vollmayr-Lee et al. 2013; Welch et al. 2013]. However, since MC moves do not necessarily reproduce the spontaneous dynamics of a glass as it relaxes toward lower-energy states, it is not guaranteed that the simulated glass matches that formed by experiments [Berthier and Ediger 2020].

1.2. Experimental-data-based simulations

To overcome the limitations of simulations based on physical knowledge [Berthier and Ediger 2020; Li et al. 2017; Wright 2020], simulations based on experimental data—e.g., reverse Monte Carlo (RMC) simulations [Biswas et al. 2004; Keen and McGreevy 1990] or empirical potential structure refinement (EPSR) simulations [Nienhuis et al. 2021; Soper 2005; Weigel et al. 2008]—have been proposed to invert experimental data (i.e., structural signatures of the real underlying atomic structure) into a three-dimensional atomic structure. In detail, RMC simulations adopt an MC search algorithm [Allen and Tildesley 2017] wherein a series of MC moves (e.g., random displacement of atoms) is performed so as to eventually obtain a glass structure that satisfies the structural constraints provided by experiments [McGreevy 2001; McGreevy and Pusztai 1988]. In the same spirit, EPSR simulations reduce the difference between simulation and experimental data by iteratively tuning the coefficients of a predefined empirical potential until the structure simulated by MD matches experiments. As compared to RMC, EPSR simulations tend to yield more realistic structures (which satisfy some basic stability constraints imposed by the empirical potential). However, the accuracy of EPSR simulations can be limited by the choice of the analytical form that is adopted for the empirical potential [Nienhuis et al. 2021; Soper 2005; Weigel et al. 2008].

Figure 1 shows a schematic illustrating the interactions between experiments and simulations. On the one hand, experiments can extract various signatures (e.g., the PDF obtained from diffraction experiments [Wright 1988]) of the atomic structure of glasses [Affatigato 2015], which can be used to validate knowledge-based simulations. On the other hand, RMC simulations can utilize these structural signatures as constraints to “inversely” construct an atomistic structure that satisfies available experimental data [McGreevy 2001]. It is notable that simulations based on experimental data effectively bypass any explicit glass formation process (e.g., melt-quenching) and, hence, are not affected by the limited timescale accessible to atomistic simulations. However, such simulations cannot offer any physical insight into the dynamics of the atoms in a glass [Bottaro and Lindorff-Larsen 2018; Pandey et al. 2016b; Yu et al. 2015].

In the following, we briefly review the state of the art in atomistic simulations of glasses in Section 2. The present challenges facing these simulations are discussed in Section 3. To address these challenges, we then highlight several new opportunities to advance the atomistic modeling of glasses in Section 4. Finally, we offer some concluding remarks in Section 5.

2. Overview of the state of the art in atomistic simulations of glasses

2.1. Basic principles of atomistic simulations of glasses

2.1.1. Newton’s law of motion

As an alternative classification, simulations can be broadly divided in terms of their description of the atomic motion. Namely, (i) MD simulations offer a direct description of the spontaneous dynamics of the atoms as per Newton’s law of motion, whereas (ii) other types of simulations (e.g., MC or RMC simulations) simply aim to construct an atomic structure based on a target objective (e.g., minimizing energy or maximizing agreement with experiments) without any explicit description of the dynamics of the atoms [Allen and Tildesley 2017; Takada 2021]. MD simulations compute the motion of the atoms dictated by Newton’s law of motion (see Figure 2a) [Alder and Wainwright 1959; Pedone 2009]. This requires the knowledge of the interatomic forcefield, that is, the force experienced by each atom. Such forces can comprise radial 2-body interactions, angular 3-body interactions, and/or many-body interactions [Daw and Baskes 1983; Stillinger and Weber 1985] and play a key role in predicting atom trajectories [Du 2015; Huang and Kieffer 2015]. In practice, the interatomic forcefield can be accurately computed using first-principles electron-level methods (e.g., ab initio MD simulation [Boero et al. 2015; Hafner 2008]) or can be approximately estimated by some empirical functions (i.e., classical MD simulation [Carré et al. 2016, 2008]). More technical details are provided in Sections 2.3 to 2.6.

2.1.2. Minimum search in a cost function landscape

Unlike MD simulations [Massobrio 2015], RMC [McGreevy 2001], energy-based MC [Allen and Tildesley 2017; Liu et al. 2019f; Utz et al. 2000], or energy minimization based on gradient descent [Bitzek et al. 2006; Shewchuk 1994] do not follow Newton’s law of motion. Rather, these approaches rely on exploring and finding the minimum position of a given “cost landscape” (e.g., potential energy for MC and energy minimization, or a loss difference between simulated and experimental fingerprints for RMC) via a series of structural modifications (e.g., displacing atoms) [McGreevy 2001; Takada 2021]. For instance, Figure 2b illustrates the principle behind energy-based MC simulations [Allen and Tildesley 2017], wherein the MC simulation searches the global minimum within the PEL by performing a series of tentative MC moves. Each move is either accepted or rejected according to a given acceptance probability defined in the MC algorithm (see Section 2.2) [Liu et al. 2019f; McGreevy and Pusztai 1988]. In contrast to MD simulations wherein large energy barriers are unlikely to be overcome [Debenedetti and Stillinger 2001; Liu et al. 2019a], simulations based on sampling a PEL can “accelerate” atomic motion to (i) jump over energy barriers and (ii) move toward the minimum energy state [Utz et al. 2000; Yu et al. 2015], which corresponds to the most stable energy state that the glass relaxes toward upon aging/relaxation [Welch et al. 2013]. Note that the landscape to explore (i.e., the function to minimize) is not always the potential energy [Debenedetti and Stillinger 2001; Tang et al. 2021], but, rather, can take the form of any function, e.g., a loss function capturing the structural difference between simulated and experimental glass in the case of RMC simulations [McGreevy 2001]. More technical details on RMC are provided in Section 2.2.
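The accept/reject logic of an energy-based MC search can be illustrated with a minimal sketch on a toy one-dimensional landscape. The double-well function `energy`, the step size `step`, and the fictive temperature `kT` are illustrative assumptions introduced here and are not taken from the cited works; a real glass simulation would replace `energy` with the potential energy of the full atomic configuration.

```python
import math
import random

def energy(x):
    """Toy double-well landscape (assumed form): shallow minimum near x = +1, deeper one near x = -1."""
    return (x * x - 1.0) ** 2 + 0.3 * x

def metropolis_search(x0, n_steps=20000, step=0.5, kT=0.2, seed=0):
    """Random-displacement search with the Metropolis acceptance rule."""
    rng = random.Random(seed)
    x, e = x0, energy(x0)
    best_x, best_e = x, e
    for _ in range(n_steps):
        x_new = x + rng.uniform(-step, step)   # tentative MC move
        e_new = energy(x_new)
        # Downhill moves are always accepted; uphill moves with probability exp(-dE/kT)
        if e_new <= e or rng.random() < math.exp(-(e_new - e) / kT):
            x, e = x_new, e_new
            if e < best_e:
                best_x, best_e = x, e
    return best_x, best_e

# Start in the shallow (higher-energy) well and let the search cross the barrier
best_x, best_e = metropolis_search(x0=1.0)
print(f"lowest state found: x = {best_x:.3f}, energy = {best_e:.3f}")
```

Because uphill moves are occasionally accepted, the search can leave the shallow well it starts in, which is the behavior that distinguishes this kind of sampling from a pure downhill minimization.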

Figure 2. (a) Illustration of a molecular dynamics (MD) simulation of a glass system, wherein, starting from an initial configuration, the motion of the atoms is determined based on the interatomic interactions following Newton’s law of motion. (b) Illustration of a Monte Carlo (MC) simulation, wherein an MC search algorithm (e.g., energy-based Metropolis algorithm [Allen and Tildesley 2017]) is used to find the minimum state (e.g., minimum energy) of a glass system within a cost function landscape, e.g., the potential energy landscape (PEL), namely, a system’s potential energy as a function of its atom positions [Lacks 2001]. The landscape is sampled by performing a series of MC moves (e.g., random displacement of an atom) [Takada 2021].

2.2. Reverse Monte Carlo (RMC) simulations

2.2.1. Cost function

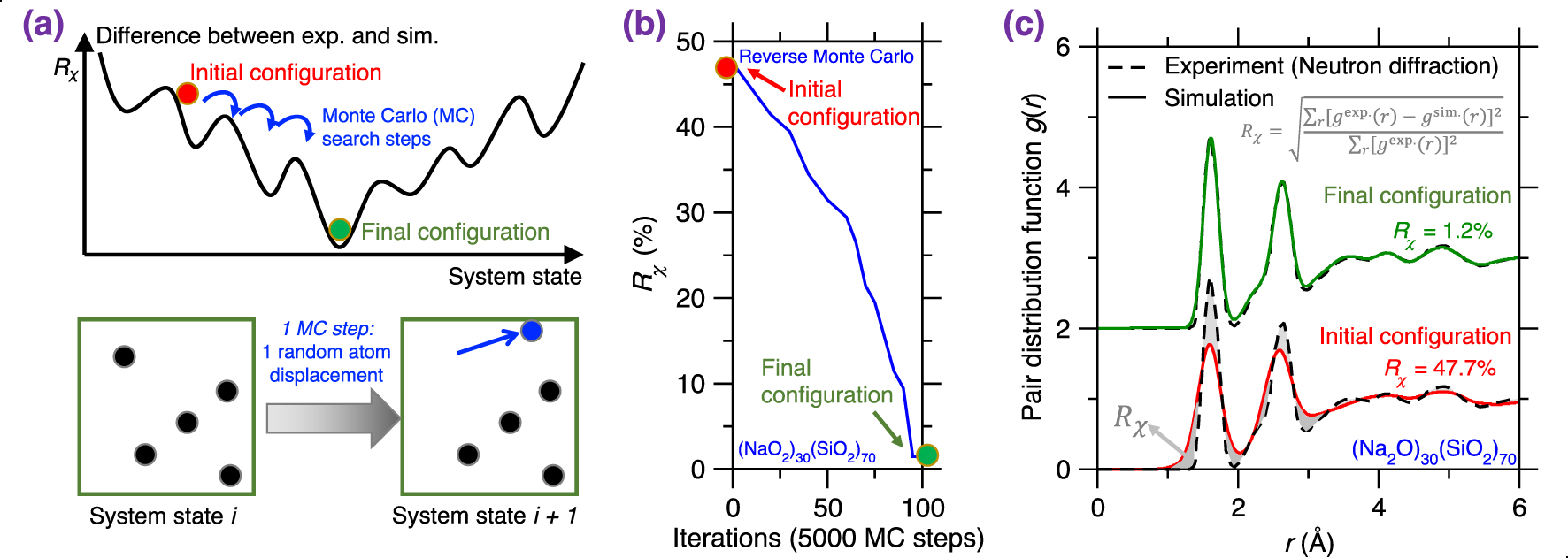

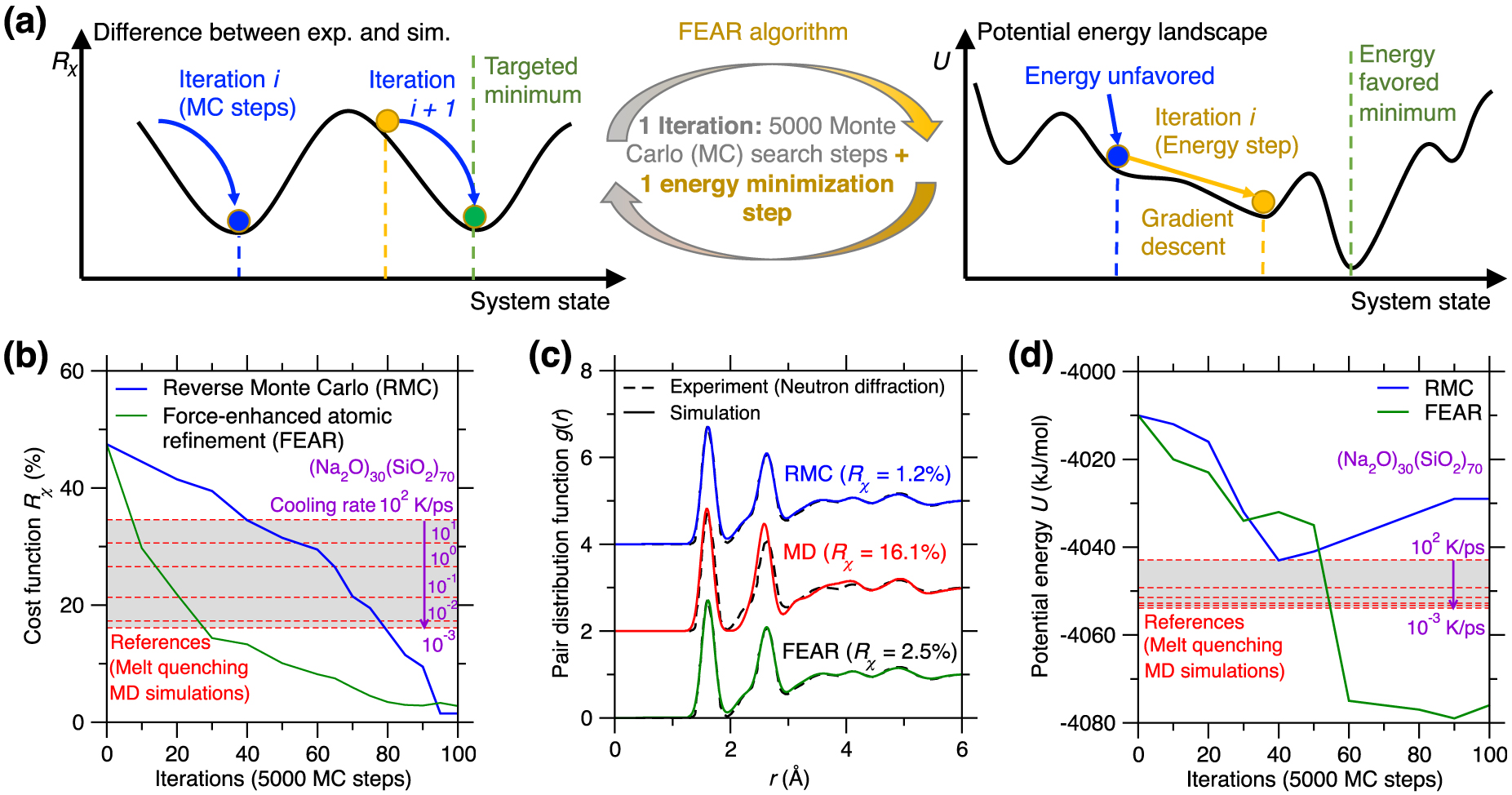

RMC simulations rely on the MC search algorithm to generate an atomistic structure satisfying experimental structural constraints (i.e., measured structural fingerprints) [Keen and McGreevy 1990; McGreevy 2001; McGreevy and Pusztai 1988]. In detail, the simulation searches for the global minimum in a cost function landscape as a function of the system’s atom positions (see upper panel in Figure 3a) by performing a series of random atomic displacements (“MC move”, see lower panel in Figure 3a) [McGreevy 2001]. Here, the cost function R𝜒 is defined as the magnitude of difference between a simulation result and its experimental reference—often, the simulated and experimental PDFs g(r), formulated as follows [Wright 1993; Zhou et al. 2020]:

| (1) |
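A commonly used form of this cost function, sketched here under the assumption that it follows the R𝜒 factor of Wright [1993], compares the two PDFs over a discretized set of distances $r_i$. The subscripts "sim" and "exp" and the index $i$ are notation introduced for illustration:

$$
R_\chi = \sqrt{\frac{\sum_i \left[ g_{\mathrm{sim}}(r_i) - g_{\mathrm{exp}}(r_i) \right]^2}{\sum_i \left[ g_{\mathrm{exp}}(r_i) \right]^2}}
$$

With this normalization, $R_\chi = 0$ corresponds to a perfect match between the simulated and experimental PDFs.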

2.2.2. Acceptance rate of RMC search

In analogy to the conventional energy-based Metropolis MC algorithm [Allen and Tildesley 2017], the acceptance probability P of each MC move can be expressed as [Zhou et al. 2020]:

| (2) |
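By analogy with the Metropolis criterion, a plausible form of this acceptance probability is sketched below. The tolerance parameter $T_\chi$ (playing the role of a fictive temperature) and the superscripts "old" and "new" are assumptions introduced here, and the exact expression used in the cited work may differ (e.g., it may involve the squared cost):

$$
P = \min\left[ 1, \exp\left( -\frac{R_\chi^{\mathrm{new}} - R_\chi^{\mathrm{old}}}{T_\chi} \right) \right]
$$

Under this form, any move that decreases the cost is always accepted, whereas a move that increases the cost is accepted with a probability that decays exponentially with the cost increase.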

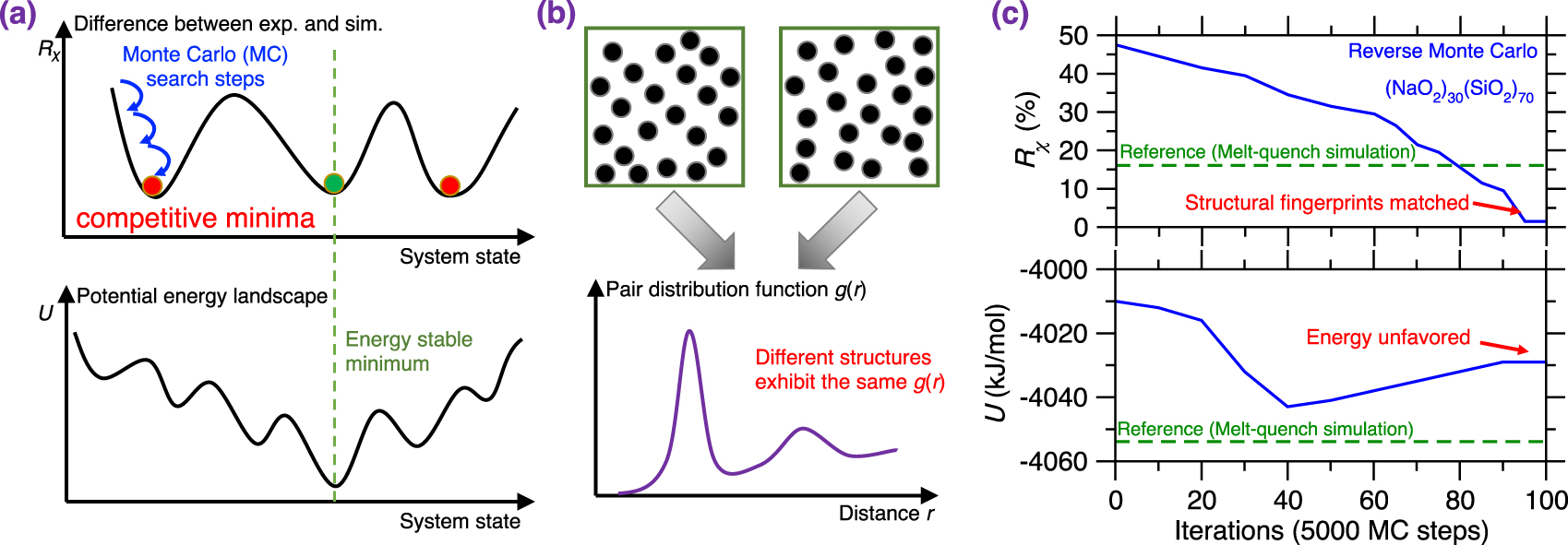

Figure 3. (a) Illustration of a reverse Monte Carlo (RMC) simulation based on the Monte Carlo (MC) search algorithm to find the glass configuration that minimizes a given cost function [McGreevy 2001], where the cost function R𝜒 is defined as the magnitude of the difference between a simulation result and its experimentally measured reference. Each MC search step consists of randomly displacing a randomly selected atom. (b) Evolution of the cost function R𝜒 as a function of the number of MC search steps during an RMC simulation of a sodium silicate glass ((Na2O)30(SiO2)70) [Zhou et al. 2020]. R𝜒 is defined herein as the magnitude of the difference between the simulated and experimental neutron PDF g(r) (see Equation (1)). (c) Comparison between the simulated g(r) and its experimental reference both at the start and end of the RMC simulation [Zhou et al. 2020]. The gray area represents the difference (i.e., R𝜒) between the experimental and simulated PDF.

2.2.3. Structural match to experimental data

RMC simulations generally yield atomistic structures that exhibit an excellent agreement with the experimental data that are used to define the cost function (e.g., PDF) [Keen and McGreevy 1990], which is not surprising since RMC solely aims to minimize the difference between simulated and experimental structural data. Figure 3b shows the evolution of the cost function R𝜒 as a function of the number of MC search steps during the RMC simulation of a sodium silicate glass ((Na2O)30(SiO2)70) [Zhou et al. 2020], wherein the cost function is defined based on the neutron PDF. As expected, R𝜒 gradually decreases and, finally, the simulation generates an atomistic structure that exhibits an excellent match with experimental data. Further, Figure 3c shows the PDF g(r) computed both at the start and end of the RMC simulation. As expected, the final configuration exhibits an excellent agreement with the experimental reference, i.e., the measured g(r) [McGreevy 2001; Pandey et al. 2015; Zhou et al. 2020].
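The decrease of the cost function during an RMC search can be reproduced with a toy sketch. Everything below is an illustrative assumption rather than the setup of the cited studies: the system is a one-dimensional periodic box, an unnormalized histogram of pair distances stands in for the PDF, the "experimental" reference is generated from a hidden near-periodic configuration, and `t_chi` is a hypothetical tolerance parameter.

```python
import math
import random

def pair_histogram(x, box, n_bins, r_max):
    """Histogram of minimum-image pair distances in a 1D periodic box (toy stand-in for g(r))."""
    hist = [0.0] * n_bins
    for i in range(len(x)):
        for j in range(i + 1, len(x)):
            d = abs(x[i] - x[j]) % box
            d = min(d, box - d)
            if d < r_max:
                hist[min(n_bins - 1, int(d / r_max * n_bins))] += 1.0
    return hist

def r_chi(sim, ref):
    """Normalized root-sum-square difference between simulated and reference fingerprints."""
    num = sum((s - r) ** 2 for s, r in zip(sim, ref))
    return math.sqrt(num / sum(r ** 2 for r in ref))

def rmc(x, ref, box, n_bins, r_max, n_steps=2000, max_disp=0.5, t_chi=0.001, seed=1):
    rng = random.Random(seed)
    x = list(x)
    cost = r_chi(pair_histogram(x, box, n_bins, r_max), ref)
    history = [cost]
    for _ in range(n_steps):
        i = rng.randrange(len(x))              # pick a random atom
        old = x[i]
        x[i] = (old + rng.uniform(-max_disp, max_disp)) % box   # random displacement
        new_cost = r_chi(pair_histogram(x, box, n_bins, r_max), ref)
        if new_cost <= cost or rng.random() < math.exp(-(new_cost - cost) / t_chi):
            cost = new_cost                    # accept the move
        else:
            x[i] = old                         # reject the move and restore the atom
        history.append(cost)
    return x, history

box, n_atoms, n_bins, r_max = 30.0, 30, 60, 15.0
rng = random.Random(0)
target = [(i + 0.5 + rng.uniform(-0.1, 0.1)) % box for i in range(n_atoms)]
reference = pair_histogram(target, box, n_bins, r_max)   # plays the role of the measured data
start = [rng.uniform(0.0, box) for _ in range(n_atoms)]
final, history = rmc(start, reference, box, n_bins, r_max)
print(f"R_chi: {history[0]:.3f} -> {history[-1]:.3f}")
```

Note that the search only sees the histogram, not the hidden configuration, so a low final cost indicates agreement with the fingerprint rather than recovery of the original atom positions.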

RMC simulations have successfully been used to reveal the structure of a variety of glasses or amorphous solids, especially in the case of systems that are too complex to be simulated by MD or for which no reliable empirical forcefield is available. Examples of simulated systems include nuclear waste glasses [Bouty et al. 2014], metal-organic frameworks (MOFs) [Beake et al. 2013; Gaillac et al. 2017], and calcium carbonate [Fernandez-Martinez et al. 2013; Goodwin et al. 2010]. Overall, RMC simulations offer a powerful tool to model glasses when accurate experimental data are available [Playford et al. 2014].

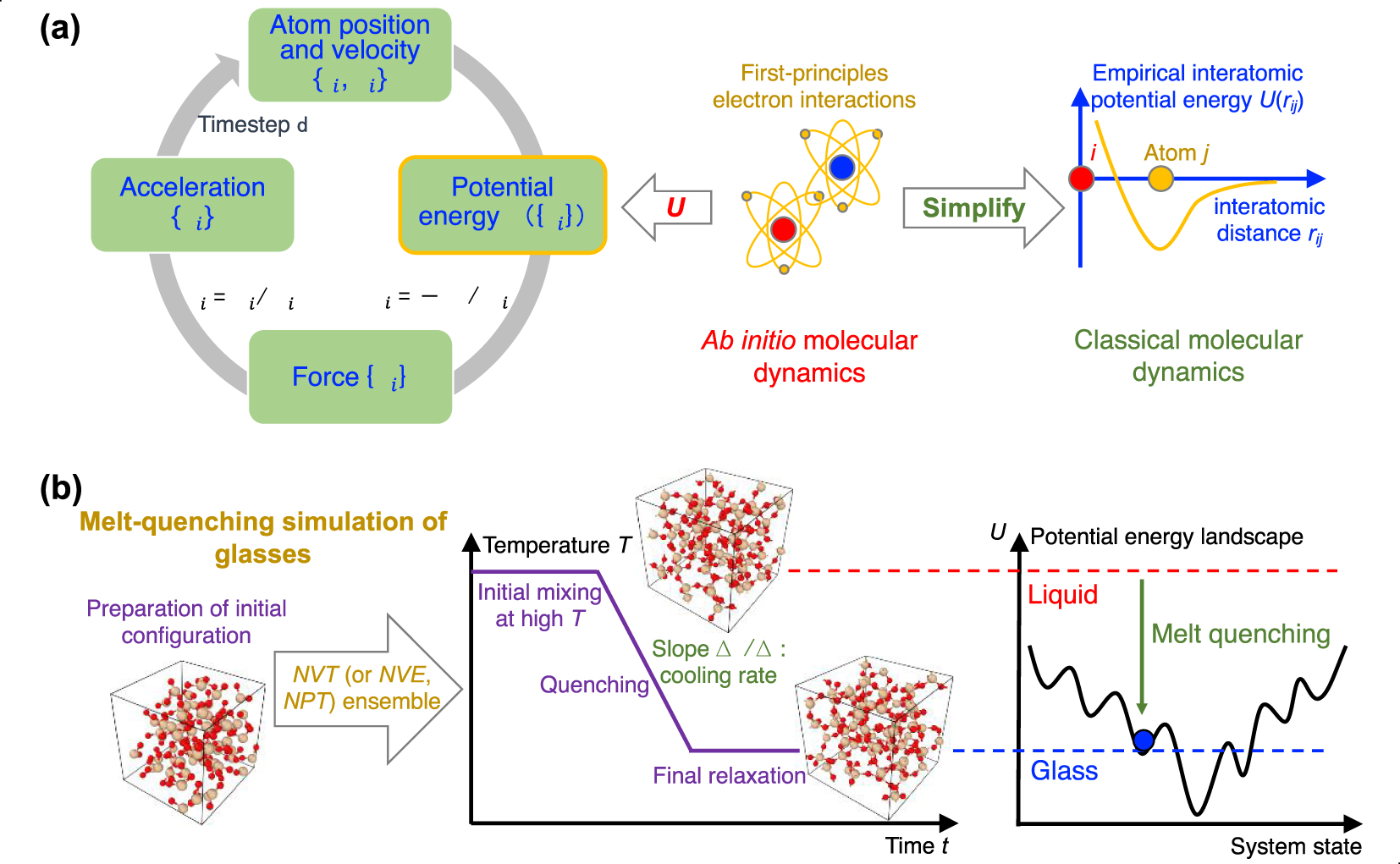

Figure 4. (a) Numerical algorithm of molecular dynamics (MD) simulation, which consists of an iterative succession of four computational steps (see text for details) [Takada 2021]. Ab initio MD computes the potential energy U({ri}) based on first-principles electronic interactions [Boero et al. 2015], whereas, in classical MD, the interatomic interaction is approximately estimated by some empirical functionals [Huang and Kieffer 2015]. (b) Schematic illustrating a melt-quenching simulation for glassy silica. After an initial SiO2 configuration is prepared, the system is melted at high temperature in the NVT ensemble (or other ensembles, e.g., NPT ensemble), quenched with a given cooling rate to a low temperature, and finally relaxed at this temperature [Carré et al. 2016]. From the viewpoint of the potential energy landscape (PEL) [Wilkinson and Mauro 2021], starting from the high-energy, ergodic liquid state, the system gradually evolves to some nonergodic, low-energy states and finally gets trapped in a local minimum corresponding to a glassy state [Debenedetti and Stillinger 2001; Goldstein 1969].

2.3. Molecular dynamics (MD) simulations

MD simulations rely on interatomic forcefields to predict the trajectory of the atoms according to Newton’s law of motion [Alder and Wainwright 1960, 1959; Rahman and Stillinger 1971; Stillinger and Rahman 1974]. Despite their short timescale [Li et al. 2017], MD simulations can reproduce the formation process of glasses (e.g., melt-quenching under a high cooling rate) and thereby generate the atomistic structure of a glass [Bauchy 2014; Micoulaut et al. 2013]. In this section, we provide some general guidelines to implement MD simulations of glasses.

2.3.1. Numerical algorithm

The algorithm of MD simulations consists of a loop of four successive steps (see Figure 4a) [Allen and Tildesley 2017; Takada 2021], namely, (i) computing the system’s potential energy U({ri}) by summing up all interatomic interactions for the current atom positions {ri}, (ii) calculating the resultant force {Fi} experienced by each atom i via energy differentiation (i.e., Fi = −∂U∕∂ri), (iii) obtaining each atom’s acceleration {ai} from {Fi} as per Newton’s law of motion, that is, ai = Fi∕mi, where mi is the mass of atom i, and finally, (iv) updating the atom positions and velocities after a small, fixed timestep via numerical integration (e.g., Verlet or leapfrog algorithm) [Allen and Tildesley 2017]. Repeated over many timesteps, this four-step loop yields the position of each atom as a function of time, that is, its trajectory.
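The four-step loop can be sketched for the smallest possible system, namely, two atoms moving along a line. The Lennard-Jones pair potential, the reduced units, the velocity-Verlet integrator, and all numerical values below are illustrative assumptions; they are not the forcefields or settings of the cited studies.

```python
def lj_energy_force(r, eps=1.0, sigma=1.0):
    """Lennard-Jones pair energy U(r) and force magnitude -dU/dr (reduced units)."""
    sr6 = (sigma / r) ** 6
    u = 4.0 * eps * (sr6 * sr6 - sr6)
    f = 24.0 * eps * (2.0 * sr6 * sr6 - sr6) / r
    return u, f

def compute_forces(x):
    """Steps (i)-(ii): potential energy U({r_i}) and forces F_i = -dU/dr_i for two atoms in 1D."""
    r = x[1] - x[0]
    u, f = lj_energy_force(abs(r))
    direction = 1.0 if r > 0 else -1.0
    # A positive f is repulsive: it pushes atom 1 away from atom 0 and vice versa
    return u, [-f * direction, f * direction]

def run_md(x, v, m, dt, n_steps):
    """Velocity-Verlet integration; returns final positions, velocities, and total energies."""
    u, forces = compute_forces(x)
    energies = []
    for _ in range(n_steps):
        a = [fi / mi for fi, mi in zip(forces, m)]           # step (iii): a_i = F_i / m_i
        x = [xi + vi * dt + 0.5 * ai * dt * dt               # step (iv): update positions
             for xi, vi, ai in zip(x, v, a)]
        u, forces = compute_forces(x)                        # steps (i)-(ii) at the new positions
        a_new = [fi / mi for fi, mi in zip(forces, m)]
        v = [vi + 0.5 * (ai + an) * dt                       # step (iv): update velocities
             for vi, ai, an in zip(v, a, a_new)]
        kinetic = sum(0.5 * mi * vi * vi for mi, vi in zip(m, v))
        energies.append(u + kinetic)
    return x, v, energies

x_final, v_final, energies = run_md(x=[0.0, 1.3], v=[0.0, 0.0], m=[1.0, 1.0], dt=0.005, n_steps=2000)
drift = max(abs(e - energies[0]) for e in energies)
print(f"largest deviation of the total energy: {drift:.2e}")
```

In a production code, the force evaluation would loop over neighbor lists in three dimensions with periodic boundary conditions, but the structure of the loop is unchanged.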

It should be pointed out that the accuracy of an MD simulation depends on (i) the realistic nature of the initial configuration (i.e., initial positions and velocities), (ii) the value of the integration timestep, and, importantly, (iii) the accuracy of the interatomic potential energy. First, in the case of glasses, the initial configuration is usually constructed by randomly placing some atoms in a cubic box while ensuring the absence of any unrealistic overlap (see below). The configuration is then relaxed at elevated temperature until the system loses the memory of its initial configuration. At this point, the outcome of the simulation no longer depends on how realistic the initial configuration was. Second, the integration timestep needs to be small enough (∼1 fs) to ensure the accuracy of the numerical integration. In practice, one can check that the timestep is small enough by ensuring the conservation of the system’s total energy and linear/angular momentum during a simulation in the microcanonical (NVE) ensemble [Grubmüller et al. 1991; Levesque and Verlet 1993; Omelyan et al. 2002]. Third, if the integration timestep is small enough, the accuracy of the MD simulation primarily depends on that of the underlying potential energy (see Figure 4a) [Du 2015; Huang and Kieffer 2015].
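The timestep check based on energy conservation can be illustrated with a minimal sketch. A single harmonic oscillator in reduced units is assumed here in place of a real glass, and the three trial timesteps are arbitrary illustrative values; the point is only that the fluctuation of the total energy in an NVE run grows with the timestep.

```python
def energy_fluctuation(dt, total_time=50.0, k=1.0, m=1.0):
    """Largest relative deviation of the total energy for a velocity-Verlet NVE run
    of a harmonic oscillator (reduced units, assumed toy system)."""
    x, v = 1.0, 0.0
    e0 = 0.5 * k * x * x
    a = -k * x / m
    max_dev = 0.0
    for _ in range(int(round(total_time / dt))):
        x += v * dt + 0.5 * a * dt * dt
        a_new = -k * x / m
        v += 0.5 * (a + a_new) * dt
        a = a_new
        e = 0.5 * m * v * v + 0.5 * k * x * x
        max_dev = max(max_dev, abs(e - e0) / e0)
    return max_dev

fluctuations = {dt: energy_fluctuation(dt) for dt in (0.01, 0.1, 0.5)}
for dt, dev in fluctuations.items():
    print(f"dt = {dt:<5} relative energy fluctuation = {dev:.2e}")
```

For this integrator, the energy fluctuation scales roughly with the square of the timestep, so halving the timestep is a simple way to test whether a given choice is already converged.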

Relying on the most fundamental quantum mechanics describing electron interactions (i.e., first-principles calculation of electron interactions) [Hohenberg and Kohn 1964; Kohn and Sham 1965], the interatomic interactions can be accurately computed by conducting first-principles MD simulation (i.e., ab initio MD simulation [Boero et al. 2015], see Section 2.4). Such simulations are computationally expensive but can be used to validate or inform classical MD simulations relying on simplified, empirical potential energy functionals (see Section 2.5) [Carré et al. 2008].

2.3.2. Thermodynamical ensembles

A direct numerical solution of Newton’s law of motion yields the microcanonical NVE dynamics of the N atoms, that is, a dynamics wherein the total energy and volume of the system are conserved. However, the NVE ensemble does not always mimic experimental conditions. In practice, a system can either be isolated from the rest of the universe (i.e., presenting a constant energy) or be in contact with a thermostat (wherein the thermostat can provide or receive energy so as to fix the temperature T of the system). The volume V of the system can also either be fixed or variable based on the pressure P imposed by a barostat. As such, the most common thermodynamic ensembles used in MD simulations are the microcanonical (NVE), canonical (NVT), and isothermal–isobaric (NPT) ensembles [Du 2019; Leach 2001]. Simulating the motion of the atoms in a non-NVE ensemble (e.g., NVT or NPT) requires slight modifications to the constitutive equations used to calculate the acceleration of each atom [Allen and Tildesley 2017; Du 2019]. On the one hand, simulating a constant-temperature dynamics requires the use of a thermostat-based MD algorithm (e.g., Nosé–Hoover thermostat [Hoover 1985; Nosé 1984]) to adjust on-the-fly the atomic velocities so as to achieve a target temperature [Andersen 1980; Martyna et al. 1996]. On the other hand, simulating a constant-pressure dynamics involves the use of a barostat-based MD algorithm [Allen and Tildesley 2017; Leach 2001] (e.g., Nosé–Hoover barostat [Martyna et al. 1992]) to adjust on-the-fly the system’s volume so as to achieve a target pressure (or state of stress) [Martyna et al. 1996; Tuckerman et al. 2006]. More details can be found in Allen and Tildesley [2017] and Leach [2001].
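The idea of adjusting the velocities on-the-fly can be sketched with a simpler scheme than the Nosé–Hoover thermostat named above. The example below swaps in a Berendsen-style weak-coupling rescaling, which is easier to write down but does not sample the canonical ensemble exactly. Reduced units (k_B = 1), 3N degrees of freedom, the coupling time `tau`, and the absence of any actual dynamics between rescaling steps are all simplifying assumptions.

```python
import math
import random

def temperature(velocities, masses, k_b=1.0):
    """Instantaneous kinetic temperature T = sum(m v^2) / (N_dof k_B), with N_dof = 3N assumed."""
    n_dof = 3 * len(velocities)
    twice_kinetic = sum(m * sum(c * c for c in v) for v, m in zip(velocities, masses))
    return twice_kinetic / (n_dof * k_b)

def berendsen_rescale(velocities, masses, t_target, dt, tau):
    """Scale all velocities by lambda = sqrt(1 + (dt/tau) (T_target/T - 1))."""
    t_now = temperature(velocities, masses)
    lam = math.sqrt(1.0 + (dt / tau) * (t_target / t_now - 1.0))
    return [[lam * c for c in v] for v in velocities]

rng = random.Random(0)
n_atoms = 100
masses = [1.0] * n_atoms
velocities = [[rng.gauss(0.0, math.sqrt(3.0)) for _ in range(3)] for _ in range(n_atoms)]
t_initial = temperature(velocities, masses)

# In a real MD run, a force evaluation and an integration step would sit inside this loop
for _ in range(1000):
    velocities = berendsen_rescale(velocities, masses, t_target=1.0, dt=0.01, tau=1.0)

t_final = temperature(velocities, masses)
print(f"T: {t_initial:.3f} -> {t_final:.3f}")
```

The ratio `dt / tau` controls how strongly the system is coupled to the heat bath: a larger `tau` gives a gentler correction per step and a slower approach to the target temperature.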

2.3.3. Melt-quenching simulations

The most common method used in MD to prepare a glass consists in mimicking the experimental melt-quenching process [Soules 1990]. The melt-quenching process is detailed in the following and illustrated by Figure 4b for the case of a silica glass [Carré et al. 2008; Liu et al. 2019e].

First, an initial configuration must be created. To this end, one option is to start with a crystalline configuration (e.g., α-quartz in the case of a silica glass). However, this is often not the preferred option as (i) there might not exist a crystal with the same composition as that of the target glass, (ii) the crystal might have a very different density from that of the glass, (iii) the shape of the unit cell of the crystal (e.g., triclinic unit cell) might result in an unnecessarily complicated simulation box geometry, and (iv) melting the initial crystalline structure might require high temperature and/or long simulation time. As an alternative, and often preferred, option, the initial configuration can be prepared by randomly placing atoms or molecules (e.g., SiO2 molecules in the case of a silica glass) into a cubic simulation box with periodic boundary conditions (PBC) [Leach 2001]. The box size can either be fixed so as to match the experimental density of the glass (if the quenching is performed in the NVT ensemble) or set to an arbitrary value (if the quenching is performed in the NPT ensemble). Note that, when creating the initial configuration, care must be taken to avoid any unrealistic atom overlap that might cause spurious fast atom motions at the beginning of the simulation [Martínez et al. 2009]. This can be achieved by imposing a cutoff threshold when randomly placing the atoms, e.g., by ensuring that the distance between a pair of atoms is never lower than the sum of their radii or that a pair of cations (or anions) are never too close to each other.
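The random placement with a cutoff threshold can be sketched as follows. The density of 2.2 g/cm³ and the molar mass of 60.084 g/mol for SiO2, the system size of 100 formula units, and the single species-independent cutoff `min_dist` of 1.0 Å are illustrative assumptions; a production setup would typically use species-dependent cutoffs.

```python
import math
import random

AVOGADRO = 6.02214076e23

def box_length_angstrom(n_units, molar_mass_g_mol, density_g_cm3):
    """Edge of the cubic box (in angstrom) that matches a target density."""
    volume_cm3 = n_units * molar_mass_g_mol / (AVOGADRO * density_g_cm3)
    return (volume_cm3 * 1e24) ** (1.0 / 3.0)   # 1 cm^3 = 1e24 cubic angstrom

def minimum_image_distance(a, b, box):
    """Distance between two points under cubic periodic boundary conditions."""
    d2 = 0.0
    for ai, bi in zip(a, b):
        d = abs(ai - bi)
        d = min(d, box - d)
        d2 += d * d
    return math.sqrt(d2)

def place_atoms(n_atoms, box, min_dist, seed=0, max_tries=100000):
    """Insert atoms one by one at random, rejecting any trial closer than min_dist to an existing atom."""
    rng = random.Random(seed)
    positions = []
    tries = 0
    while len(positions) < n_atoms:
        tries += 1
        if tries > max_tries:
            raise RuntimeError("could not place all atoms; lower min_dist or enlarge the box")
        trial = tuple(rng.uniform(0.0, box) for _ in range(3))
        if all(minimum_image_distance(trial, p, box) >= min_dist for p in positions):
            positions.append(trial)
    return positions

box = box_length_angstrom(n_units=100, molar_mass_g_mol=60.084, density_g_cm3=2.2)
positions = place_atoms(n_atoms=300, box=box, min_dist=1.0)
print(f"box edge = {box:.2f} angstrom, atoms placed = {len(positions)}")
```

The distance test uses the minimum-image convention so that atoms placed near opposite faces of the box are also prevented from overlapping across the periodic boundary.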

Second, the initial configuration must be melted at high temperature so as to fully lose the memory of the initial configuration. The choice of the melting temperature depends on the type of glass that is considered. On the one hand, the temperature must be high enough to ensure that the atoms move fast enough to “reset” the initial configuration or fully melt the initial crystal. On the other hand, a temperature that is too high might induce some spurious instabilities (e.g., due to the “Buckingham catastrophe” [Carré et al. 2008] or if the system approaches the vaporization point) and increase the length of the simulation (since, at fixed cooling rate, a higher initial temperature increases the duration of cooling). The fact that the initial melting is long enough to ensure that the system has lost the memory of its initial configuration can be checked by computing the intermediate scattering function (ISF) or, as an approximate rule of thumb, by ensuring that the atoms have, on average, diffused by at least half of the simulation box.
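The rule of thumb based on atomic diffusion can be sketched as a simple check on the mean squared displacement. Interpreting "half of the simulation box" as a root-mean-square displacement of at least half the box edge is an assumption made here, and the coordinates must be unwrapped (i.e., not folded back into the box by the periodic boundary conditions). The positions used in the example are hypothetical.

```python
import math

def mean_squared_displacement(start, end):
    """Average squared displacement per atom between two sets of unwrapped coordinates."""
    total = 0.0
    for p_start, p_end in zip(start, end):
        total += sum((e - s) ** 2 for s, e in zip(p_start, p_end))
    return total / len(start)

def has_lost_memory(start, end, box):
    """True if the root-mean-square displacement reaches at least half of the box edge."""
    return math.sqrt(mean_squared_displacement(start, end)) >= 0.5 * box

# Hypothetical unwrapped coordinates: every atom has moved by 5 length units
start = [(0.0, 0.0, 0.0)] * 4
end = [(3.0, 4.0, 0.0)] * 4
print(has_lost_memory(start, end, box=10.0))   # rms displacement 5 vs threshold 5
print(has_lost_memory(start, end, box=12.0))   # rms displacement 5 vs threshold 6
```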

Third, the melt is quenched to the glassy state by decreasing temperature. This is typically achieved by linearly decreasing temperature over time, although more complicated thermal routes can be considered (e.g., nonconstant cooling rate or step-by-step cooling). The cooling process can either be performed in the NVT or NPT ensemble. The NPT ensemble is usually desirable as it mimics the experimental synthesis of glass (i.e., wherein the melt is quenched under atmospheric pressure). In turn, the NVT ensemble can yield some spurious effects since, at fixed density, the pressure changes as a function of temperature. As a result, when using the NVT ensemble during cooling, the glass tends to “freeze” under positive (compressive) pressure at the glass transition temperature, which can impact the resulting structure. However, some interatomic forcefields are unable to yield the correct final glass density, so that using the NVT ensemble and fixing the volume to achieve the experimental glass density might, in some cases, be necessary. Due to the high computing cost of MD, the cooling rate (typically 10¹⁴–10⁹ K/s) is much faster than those achieved in conventional experiments (10²–10⁰ K/s) [Li et al. 2017]. From the viewpoint of the PEL [Goldstein 1969; Wilkinson and Mauro 2021], the system starts, upon cooling, from a high-energy, ergodic liquid state and gradually evolves to some nonergodic, lower-energy state, before eventually getting trapped in a local minimum corresponding to a glassy state (see Figure 4b) [Debenedetti and Stillinger 2001]. At the end of the cooling process, it is also common to perform an additional relaxation run to ensure that the system has reached a plateau in energy, and volume or pressure (in the NPT and NVT ensembles, respectively).
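The gap between simulated and experimental cooling rates can be made concrete with a short calculation of the number of MD steps required by a linear quench. The start and end temperatures (3000 K and 300 K) and the 1 fs timestep are illustrative assumptions; the two cooling rates are taken from the ranges quoted above.

```python
def quench_plan(t_start, t_end, rate_k_per_s, timestep_fs=1.0):
    """Duration (in ps) and number of MD steps needed for a linear quench."""
    duration_s = (t_start - t_end) / rate_k_per_s
    duration_ps = duration_s * 1e12
    n_steps = int(round(duration_s / (timestep_fs * 1e-15)))
    return duration_ps, n_steps

def ramp_temperature(step, t_start, rate_k_per_s, timestep_fs=1.0):
    """Target thermostat temperature at a given MD step of a linear cooling ramp."""
    return t_start - rate_k_per_s * step * timestep_fs * 1e-15

# A cooling rate within the range accessible to MD (10^12 K/s)
duration_ps, n_steps = quench_plan(3000.0, 300.0, rate_k_per_s=1e12)
print(f"MD-like quench: {duration_ps:.0f} ps, {n_steps:.1e} steps")

# A cooling rate typical of conventional experiments (1 K/s)
_, n_steps_exp = quench_plan(3000.0, 300.0, rate_k_per_s=1.0)
print(f"experimental quench: {n_steps_exp:.1e} steps")
```

Under these assumptions, the simulated quench requires a few million steps, whereas reproducing the experimental rate would require on the order of 10¹⁸ steps, which is far out of reach.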

Fourth, once formed, the glass can be investigated or subjected to additional simulations [Du 2019; Pedone 2009]. Typical investigations include various structural analyses (e.g., coordination number) [Bauchy 2014, 2012], mechanical properties (e.g., strain–stress curve) [Liu et al. 2019b; Yang et al. 2019], thermodynamics (e.g., potential energy) [Bauchy and Micoulaut 2015], and vibrational/dynamical properties (e.g., atom diffusivity) [Bauchy et al. 2013; Bauchy and Micoulaut 2011]. More details can be found in Levchenko et al. [2020] and Massobrio [2015]. Note that any statistical analysis must be averaged over a trajectory that is long enough to filter out the effect of statistical fluctuations (the magnitude thereof depending on the size of the simulated system). It is also worth noting that, upon quenching, the glass may end up in notably different regions of the energy landscape [Allen and Tildesley 2017]. As such, it is good practice to perform a series of independent melt-quenching simulations for each glass (typically around 3 to 6) and to average results over these simulations.
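Averaging over independent melt-quenching runs can be sketched as a mean with a standard error. The six density values below are hypothetical numbers chosen for illustration and do not come from any simulation; reporting the standard error of the mean is one possible convention, assumed here.

```python
import math
import statistics

def average_over_runs(values):
    """Mean and standard error of the mean over independent simulations."""
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    return mean, sem

# Hypothetical glass densities (g/cm^3) from six independent quenches
densities = [2.21, 2.19, 2.20, 2.22, 2.18, 2.20]
mean_density, sem_density = average_over_runs(densities)
print(f"density = {mean_density:.3f} +/- {sem_density:.3f} g/cm^3")
```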

2.4. Ab initio molecular dynamics simulations

2.4.1. First-principles calculation of interatomic interactions

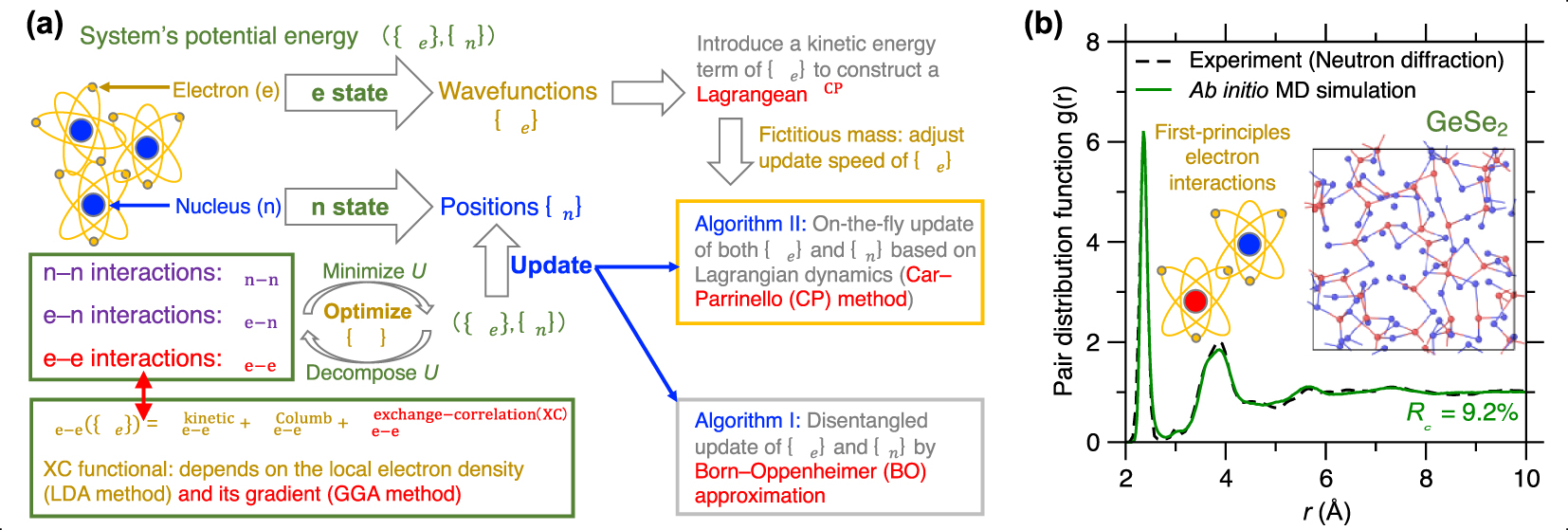

Figure 5. (a) Schematic illustrating the basic idea of ab initio molecular dynamics (MD) based on first-principles calculation of the system’s potential energy U (see text for details) [Boero et al. 2015]. (b) Comparison between the pair distribution function (PDF) g(r) obtained by neutron diffraction experiment and by ab initio MD simulation using the Car–Parrinello (CP) method and generalized gradient approximation (GGA) potential for a GeSe2 glass [Micoulaut et al. 2013]. The inset is a snapshot of the simulated GeSe2 glass.

Ab initio MD simulations estimate the interatomic interactions based on first-principles calculation of electron interactions within the framework of density functional theory (DFT) [Kohn and Sham 1965; Marx and Hutter 2009; Parr 1980]. Figure 5a illustrates the basic idea of ab initio MD, wherein a glass system’s potential energy U is computed as a function of both the electron wave functions {𝛹e} (i.e., the electron state) and the nuclei positions {rn} (i.e., the nuclei state) [Hohenberg and Kohn 1964; Kohn and Sham 1965]. The potential energy U is calculated by summing up three types of interactions in the system [Boero et al. 2015; Marx and Hutter 2009]:

| (3) |
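Assuming that the three contributions are the nucleus–nucleus, nucleus–electron, and electron–electron interactions, the sum can be written as shown below. Only the last symbol, $U_{e-e}$, appears in the surrounding text; the other two subscripts are notation assumed here:

$$
U\left(\{\Psi_e\}, \{\mathbf{r}_n\}\right) = U_{n-n} + U_{n-e} + U_{e-e}
$$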

2.4.2. Choice of exchange-correlation (XC) potential

Ue−e is a key ingredient in first-principles simulation. It can be further described by three types of energy terms [Boero et al. 2015; Kohn and Sham 1965]:

| (4) |
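Under the standard Kohn–Sham decomposition, which is assumed here, the three terms would be the kinetic energy of the non-interacting electrons, the Hartree (classical Coulomb) energy, and the exchange-correlation (XC) energy. The symbols $T_s$, $U_H$, and $U_{XC}$ are illustrative notation:

$$
U_{e-e} = T_s + U_H + U_{XC}
$$

Only the last term requires an approximation (e.g., a GGA functional), which is why the choice of XC potential matters for the accuracy of the simulation.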

2.4.3. Numerical algorithms

Based on all these formulations of first-principles interatomic interactions [Boero et al. 2015; Marx and Hutter 2009], the system’s ground-state potential energy U({𝛹e},{rn}) can be calculated by optimizing {𝛹e} so as to minimize U for the current nuclei positions {rn} (see Figure 5a) [Hafner 2008; Pople et al. 1989]. Once the nuclei positions {rn} have been updated over a small timestep, the system’s potential energy U is recomputed by optimizing {𝛹e} again [Boero et al. 2015]. Two algorithms have been developed to optimize {𝛹e} and update {rn} [Niklasson et al. 2006], viz., (i) the Born–Oppenheimer (BO) approximation, which disentangles the updates of {𝛹e} and {rn} [Boero et al. 2015; Niklasson et al. 2006], wherein {𝛹e} is recomputed at each timestep and offers an accurate estimation of the potential energy U to update the nuclei positions {rn}, and (ii) the Car–Parrinello (CP) method, which constructs a Lagrangian LCP({𝛹e},{rn}) that updates both quantities on-the-fly based on Lagrangian dynamics [Car and Parrinello 1985], where LCP includes a constructed kinetic energy term of {𝛹e} obtained by assigning to {𝛹e} a fictitious electronic mass that dictates the update inertia of {𝛹e} [Boero et al. 2015]. In practice, systems with slow change of nuclei positions tend to require a large fictitious mass to delay the update of {𝛹e} as well as a large timestep to accelerate the update of {rn} [Car and Parrinello 1985]. In other words, when running a CP-MD simulation, one needs to select a timestep and a fictitious mass that are large enough to (i) reduce the computational cost of the update of {𝛹e} and {rn}, but also small enough to (ii) numerically conserve the system’s energy and linear/angular momentum [Boero et al. 2015]. More details can be found in Boero et al. [2015] and Marx and Hutter [2009].

2.4.4. Applications and limitations

Since ab initio MD simulations are typically able to accurately describe interatomic interactions [Pople et al. 1989], they are the method of choice to simulate complex glasses for which no robust empirical forcefields are available (see below). This is typically the case for glasses exhibiting complex bonds (i.e., with a mixed ionic/covalent/metallic character or varying electronic delocalization) or flexible local structures (e.g., varying coordination numbers) [Massobrio 2015]. For example, chalcogenide glasses (e.g., Ge–Se glasses) typically exhibit complex structural features that cannot be accurately reproduced by classical MD [Mauro and Varshneya 2006], including under- and over-coordinated atoms, edge-sharing structures, and homopolar bonds (see inset of Figure 5b) [Petri et al. 2000]. Figure 5b shows a melt-quenched GeSe2 glass prepared by ab initio MD simulation, wherein its computed PDF g(r) is compared with neutron diffraction data [Micoulaut et al. 2013]. Relying on the CP-MD method and a GGA exchange–correlation (XC) functional [Car and Parrinello 1985; Perdew et al. 1996a], the simulation offers a glass structure that is in excellent agreement with experimental data [Micoulaut et al. 2009].

However, despite their unparalleled predictive power [Pople et al. 1989], ab initio simulations still come with a series of limitations. First, although they are built on a fundamental description of electronic interactions, they still rely on a number of assumptions (e.g., choice of the pseudopotential and exchange–correlation functional). Second, ab initio MD simulations come with a computational cost that is orders of magnitude larger than that of classical MD [Marx and Hutter 2009]. This limits the timescale that is accessible to ab initio MD simulations (typically to hundreds of ps) [Boero et al. 2015] and, in turn, restricts their use to high cooling rates, which tends to yield glasses that are more disordered (higher fictive temperature) than their experimental counterparts [Li et al. 2017]. Finally, the computational cost of ab initio MD simulations typically scales with the cube of the number of electronic degrees of freedom [Hafner 2008]. As a result, ab initio MD simulations are usually limited to very small systems (up to a few hundred atoms) [Massobrio 2015]. This prevents their use in capturing small compositional effects (since replacing one atom by another largely affects the overall composition in a small system) or extended structural features (e.g., large rings or compositional heterogeneity) [Du and Corrales 2006; Nakano et al. 1994].

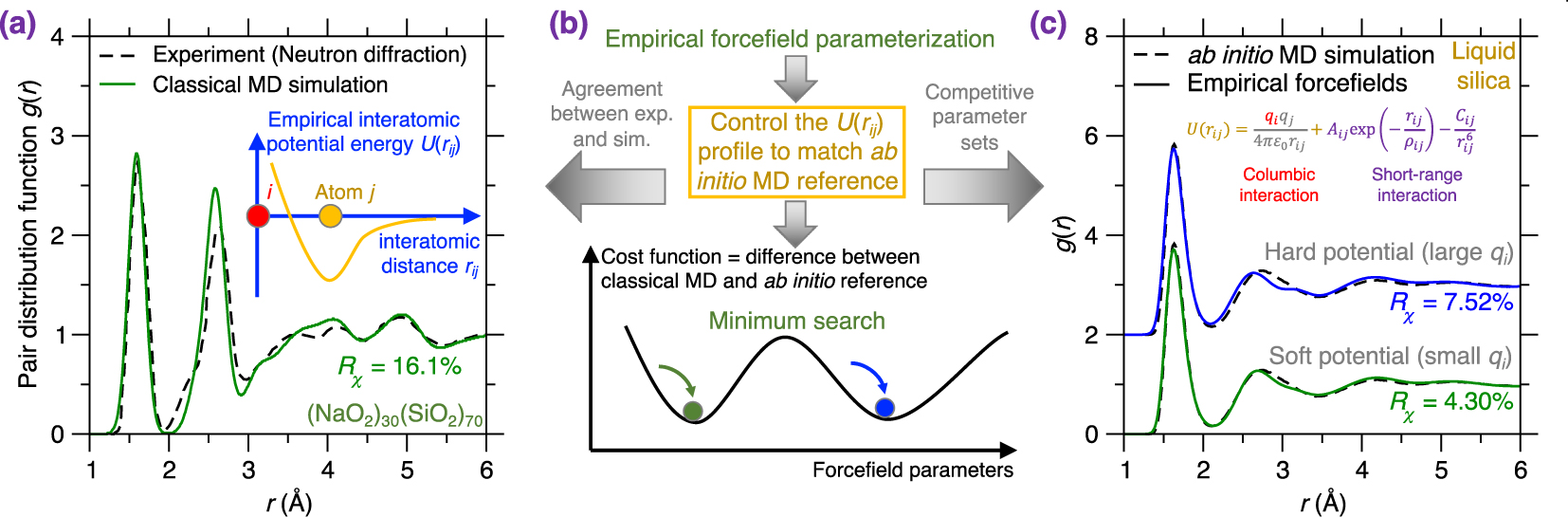

(a) Pair distribution function (PDF) g(r) of a melt-quenched (Na2O)30(SiO2)70 glass prepared by a classical MD simulation using an empirical forcefield (here, a Buckingham potential [Cormack et al. 2002], see (5)) and a cooling rate of 0.001 K/ps [Zhou et al. 2020]. The neutron diffraction data is added for comparison [Wright et al. 1991]. The inset illustrates the shape of this empirical forcefield, i.e., the interatomic potential energy U(rij) as a function of interatomic distance rij [van Beest et al. 1990]. (b) Schematic illustrating the parameterization of an empirical forcefield by searching for the minimum position (i.e., the optimal forcefield) in a cost function landscape as a function of forcefield parameters [Carré et al. 2016]. The cost function is defined herein as the difference between the classical MD result and its ab initio reference [Liu et al. 2019e]. The optimal forcefield is parameterized so as to match ab initio MD simulation and also offers a good agreement with experiment (see panel (a)) [Carré et al. 2008]. Note that several competitive minima (yielding competitive optimal forcefields) can coexist in the cost function landscape (see panel (c)) [Liu et al. 2020a]. (c) Comparison between the PDFs computed by ab initio MD simulation and classical MD simulation using two distinct Buckingham forcefields (with different parameterizations) for a silica liquid. The two potentials are referred to as “soft” (exhibiting weak coulombic interactions) and “hard” (exhibiting more intense coulombic interactions) [Liu et al. 2020a].

Thanks to their accuracy, ab initio MD simulations have been applied to predict the structure and dynamics of various types of glasses or amorphous solids, including amorphous silicon [Car and Parrinello 1988], chalcogenide [Micoulaut et al. 2013], Ge–Sb–Te phase-change materials [Caravati et al. 2009], MOFs [Darby et al. 2020; Sillar et al. 2009], silicates [Baral et al. 2017; Tilocca and de Leeuw 2006], borates [Scherer et al. 2019], etc. Notably, by accurately describing the interatomic interactions, ab initio MD offers a powerful tool to simulate complex glasses that cannot be properly described by empirical forcefields.

2.5. Classical molecular dynamics simulations

2.5.1. Empirical forcefields

In contrast to the explicit description of electronic effects offered by ab initio MD [Marx and Hutter 2009], classical MD relies on empirical forcefields to describe interatomic interactions via physics/intuition-based, computationally efficient functionals [Massobrio 2015]. For example, Figure 6a shows the PDF g(r) of a melt-quenched (Na2O)30(SiO2)70 glass prepared by classical MD simulation using an empirical forcefield [Cormack et al. 2002; Du and Cormack 2004]. The neutron diffraction data is added as a reference for comparison [Wright et al. 1991]. Notably, this empirical forcefield offers an atomistic structure that is in good agreement with experimental data, even though the simulated and experimental glasses are prepared with different cooling rates [Li et al. 2017]. The good agreement between simulated and experimental PDFs (see Figure 6a) suggests that, although the empirical forcefield is a simplification of the real interatomic interactions [Huang and Kieffer 2015; van Beest et al. 1990], it can accurately describe them at a significantly reduced computational cost as compared to first-principles calculations [Bauchy et al. 2013; Carré et al. 2008]. Thanks to its much faster execution time as compared to ab initio MD simulation, classical MD simulation can extend to much longer timescales (up to a few nanoseconds) and larger length scales (up to millions of atoms) [Lane 2015; Plimpton 1995a].

2.5.2. Forcefield functionals

The optimal functional form of the empirical interatomic potential depends on the type of glass that is simulated (e.g., ionic, covalent, metallic glass, etc.) and no “universal” empirical potential is available to date [Du 2015; Huang and Kieffer 2015].

In practice, ionocovalent glasses (e.g., silicate glasses, which feature ionocovalent bonds [Huang and Kieffer 2015]) can be well described by a combination of: (i) long-range coulombic interactions and (ii) a Buckingham-format functional description of short-range interactions to compute the potential energy U(rij) between atom i and atom j at a distance rij (see inset of Figure 6a) [Carré et al. 2008; Du and Corrales 2006; van Beest et al. 1990]:

| (5) |
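
As a minimal sketch of the two contributions listed above, the following function evaluates a pair energy assuming the commonly used form U(r) = q_i q_j/(4πε0 r) + A exp(−r/ρ) − C/r⁶. The symbols A, ρ, and C, the function name, and the numerical values in the usage note are illustrative assumptions and do not necessarily match the notation or parameterization of (5).

```python
import math

# e^2 / (4*pi*eps0) expressed in eV*Angstrom, so that charges are given in
# units of the elementary charge and distances in Angstrom.
COULOMB_CONSTANT = 14.399645


def buckingham_coulomb(r, q_i, q_j, A, rho, C):
    """Pair energy (eV) between atoms i and j at separation r (Angstrom).

    Assumed functional form (illustrative, not taken from the source):
    Coulomb term + exponential short-range repulsion - r^-6 dispersion.
    """
    if r <= 0.0:
        raise ValueError("separation must be positive")
    coulomb = COULOMB_CONSTANT * q_i * q_j / r
    repulsion = A * math.exp(-r / rho)
    dispersion = -C / r**6
    return coulomb + repulsion + dispersion
```

For instance, `buckingham_coulomb(1.6, 2.4, -1.2, A=18000.0, rho=0.205, C=133.5)` evaluates a Si–O-like pair with placeholder parameters; these numbers are only meant to show the call signature and should not be used as a validated forcefield.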

Note that, in the case of interactions that rapidly converge to zero upon increasing distance (e.g., Van der Waals interactions, which are proportional to 1/r⁶), the energy error associated with using a finite cutoff converges to zero as the cutoff increases. However, this is not the case for Coulombic interactions, which decrease slowly upon increasing distance r (i.e., proportionally to 1/r). In such a case, the energy contribution that is neglected when using a cutoff (arising from long-distance interactions between atoms) does not converge to zero upon increasing cutoff. As such, long-range Coulombic interactions (including across PBC) must be explicitly considered to ensure the accuracy of the simulation. In practice, computing Coulombic interactions is often the most computationally expensive step when simulating ionocovalent glasses. In that regard, various summation methods have been developed to speed up the computation of electrostatic interactions, such as the Ewald method [Ewald 1921], the particle–particle–particle–mesh (PPPM) method [Hockney and Eastwood 1988], and the damped-shifted-force (DSF) method [Fennell and Gezelter 2006]. In detail, both the Ewald and PPPM methods are based on the idea that the summation of long-range interactions can be efficiently calculated in reciprocal space using a Fourier transform [Allen and Tildesley 2017]. Unlike the Ewald or PPPM methods, the DSF method is conducted in real space and is based on the rapidly converging summation of a short-range potential (whose cutoff is large enough) to approach the effective long-range Coulombic interactions [Fennell and Gezelter 2006]. In practice, both the Ewald and PPPM summation methods offer accurate calculations of the Coulombic interactions. In many cases, electrostatic interactions can also be reasonably well approximated by the DSF method [Carré et al. 2007; Fennell and Gezelter 2006], which, in turn, is significantly more computationally efficient than Ewald and PPPM since it does not involve any Fourier transform [Fennell and Gezelter 2006].
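
To make the real-space nature of the DSF approach more concrete, the sketch below evaluates a damped-shifted-force pair energy following the expression usually attributed to Fennell and Gezelter [2006]: the Coulomb term is damped by a complementary error function and shifted so that both the energy and the force vanish at the cutoff. The function name, the default unit constant, and the damping parameter and cutoff used in the usage note are assumptions chosen for illustration.

```python
import math


def dsf_pair_energy(r, q_i, q_j, alpha, r_cut, k_e=14.399645):
    """Damped-shifted-force Coulomb pair energy.

    r, r_cut in Angstrom, alpha in 1/Angstrom, charges in units of e;
    k_e = e^2/(4*pi*eps0) in eV*Angstrom, so the result is in eV.
    """
    if r >= r_cut:
        return 0.0  # pairs beyond the cutoff do not contribute
    # Value of the damped Coulomb kernel at the cutoff (energy shift).
    kernel_at_cut = math.erfc(alpha * r_cut) / r_cut
    # Minus the derivative of the damped kernel at the cutoff (force shift).
    force_shift = (kernel_at_cut / r_cut
                   + 2.0 * alpha / math.sqrt(math.pi)
                   * math.exp(-(alpha * r_cut) ** 2) / r_cut)
    kernel = math.erfc(alpha * r) / r - kernel_at_cut + force_shift * (r - r_cut)
    return k_e * q_i * q_j * kernel
```

Calling `dsf_pair_energy(r, 1.0, -1.0, alpha=0.2, r_cut=10.0)` for increasing `r` shows the energy going smoothly to zero at the cutoff, which is what makes a plain real-space sum over neighbors sufficient in this scheme.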

It is worth pointing out that, as the accuracy of classical MD simulations significantly relies on the analytical formulation of the empirical forcefield, it is necessary, when developing empirical forcefield functionals, to account for all essential components of the interaction (e.g., Coulombic component of ionocovalent interaction) that affect the targeted glass properties. Indeed, although select properties are not very sensitive to the details of a forcefield (e.g., density or PDF), other properties can be strongly affected by small variations in the parameters or analytical formulation of the empirical potential. In such cases, it may be necessary to develop advanced forcefields that are able to capture some complex chemical or physical behaviors, including bond formation/breaking, charge transfers, polarization effects, short-range repulsion, etc. [Jahn et al. 2006; Jahn and Madden 2007; Serva et al. 2020]. In that regard, some advanced forcefields such as the aspherical ion model (AIM)—which is more complex than the Buckingham potential [Liu et al. 2020a]—have been developed to account for these essential, complex interaction behaviors in studying the dynamics of complex oxides [Jahn et al. 2006; Jahn and Madden 2007; Serva et al. 2020]. Note that, although such potentials are more computationally expensive, they generally show an improved level of agreement with experiments and a high transferability upon composition, temperature, and pressure changes [Jahn et al. 2006; Jahn and Madden 2007; Serva et al. 2020].

In contrast to ionocovalent glasses, covalent glasses (e.g., amorphous silicon [Stillinger and Weber 1985] or chalcogenide glasses [Micoulaut et al. 2013]) cannot be described by 2-body interatomic potentials due to the directional nature of the covalent bonds they form [Phillips 1981, 1979]. As such, describing covalent glasses requires the use of 3-body potential interactions, e.g., the Stillinger–Weber (SW) potential [Ding and Andersen 1986; Stillinger and Weber 1985], to constrain the values of the interatomic angles formed by a central atom i and its two neighbor atoms j and k [Du 2015; Mauro and Varshneya 2006]:

| (6) |
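
The angular constraint described above can be sketched with the three-body term of the Stillinger–Weber form, which penalizes deviations of the j–i–k angle from a reference angle and decays smoothly to zero at a cutoff distance. The notation below (ε, σ, λ, γ, a) and the default values, which are commonly quoted for silicon, are assumptions used for illustration and may differ from the notation of (6).

```python
import math


def sw_three_body(r_ij, r_ik, theta, eps=2.1683, sigma=2.0951,
                  lam=21.0, gamma=1.20, a=1.80, cos_theta0=-1.0 / 3.0):
    """Stillinger-Weber-type three-body energy (eV) for the triplet j-i-k.

    r_ij, r_ik: distances from the central atom i (Angstrom);
    theta: angle j-i-k in radians; cos_theta0: cosine of the favored angle
    (-1/3 corresponds to the tetrahedral angle).
    """
    r_cut = a * sigma
    if r_ij >= r_cut or r_ik >= r_cut:
        return 0.0  # the term vanishes when either bond exceeds the cutoff
    # Radial factor: decays smoothly to zero as either distance nears r_cut.
    radial = math.exp(gamma * sigma / (r_ij - r_cut)
                      + gamma * sigma / (r_ik - r_cut))
    # Angular factor: zero at the favored angle, positive otherwise.
    angular = (math.cos(theta) - cos_theta0) ** 2
    return lam * eps * radial * angular
```

The energy is zero when the angle equals the tetrahedral angle and increases as the angle is distorted, which is the directional behavior that a pure 2-body potential cannot reproduce.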

Finally, the simulation of metallic glasses typically requires the description of many-body effects [Pelletier and Qiao 2019; Sheng et al. 2006], which can be well described by the Embedded Atom Method (EAM) potential [Daw et al. 1993]. In the EAM approach, the potential energy Ui of a central atom i is formulated as [Daw and Baskes 1984, 1983]:

| (7) |
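
A minimal sketch of the many-body character of the EAM approach is given below, assuming the standard decomposition of the energy of atom i into an embedding term F(ρ_i), where ρ_i is the host electron density summed over neighbors, and half of the sum of pair terms φ(r_ij). The three toy functions are hypothetical placeholders, not a fitted potential, and the notation may differ from that of (7).

```python
import math


def eam_atom_energy(neighbor_distances, density_fn, embedding_fn, pair_fn):
    """Energy of one atom: F(sum_j f(r_ij)) + 0.5 * sum_j phi(r_ij)."""
    rho_i = sum(density_fn(r) for r in neighbor_distances)
    pair_energy = 0.5 * sum(pair_fn(r) for r in neighbor_distances)
    return embedding_fn(rho_i) + pair_energy


# Hypothetical toy functions, chosen only to make the sketch runnable.
def toy_density(r):
    return math.exp(-r)


def toy_embedding(rho):
    return -math.sqrt(rho)  # nonlinear in rho, hence many-body


def toy_pair(r):
    return math.exp(-2.0 * r)
```

Because the embedding function is nonlinear, the energy of an atom with two identical neighbors is not twice the energy of an atom with one neighbor, which is what distinguishes this description from a sum of pair interactions.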

Note that, to reduce the computational cost associated with the calculation of the empirical forcefields, a cutoff rc is typically adopted—wherein the interaction energy between a pair of atoms is assumed to be zero if their distance exceeds the cutoff [Chialvo and Debenedetti 1990; Du 2015]. In addition, a neighbor list algorithm is typically adopted to reduce the number of times the distance between a pair of atoms is calculated [Allen and Tildesley 2017; Leach 2001].
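
The combination of a cutoff and a neighbor list can be sketched as follows for a cubic periodic box. This is a brute-force construction meant to show the idea only; production codes typically build the list with cell-based algorithms, and the optional `skin` margin (an assumption here) lets the list be reused for several timesteps before it is rebuilt.

```python
import math


def minimum_image_distance(p, q, box_length):
    """Distance between points p and q in a cubic periodic box."""
    total = 0.0
    for a, b in zip(p, q):
        d = b - a
        d -= box_length * round(d / box_length)  # wrap to the nearest image
        total += d * d
    return math.sqrt(total)


def build_neighbor_list(positions, box_length, r_cut, skin=0.0):
    """Return, for each atom, the indices of atoms closer than r_cut + skin."""
    r_list = r_cut + skin
    neighbors = [[] for _ in positions]
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if minimum_image_distance(positions[i], positions[j], box_length) < r_list:
                neighbors[i].append(j)
                neighbors[j].append(i)
    return neighbors
```

Once the list is built, energies and forces only need to loop over the stored neighbors of each atom rather than over all pairs.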

2.5.3. Forcefield parameterization

In addition to the choice of its functional form [Du 2015], the accuracy of an empirical forcefield strongly depends on its parameterization [Sundararaman et al. 2020, 2018; Wang et al. 2018]. Figure 6b illustrates the general idea behind the parameterization of an empirical potential. Such parameterization can be formulated as an optimization problem, wherein the forcefield parameters are optimized so as to minimize a given cost function. This problem can be illustrated in terms of a cost function landscape, which represents the topographical evolution of the cost function as a function of the forcefield parameters. The cost function captures the level of mismatch between a simulated metric and a given reference value. The simulated metric can be the interatomic energy/force, some structural features (e.g., PDF), or some other macroscopic properties (e.g., density, stiffness, etc.).

For each type of metric, reference values can be offered by experimental data or ab initio simulations. On the one hand, defining the cost function in terms of the level of mismatch between computed and experimental data tends to yield a good agreement between simulated and experimental glass properties, since the parameterization scheme specifically aims to minimize this difference. On the other hand, it is not always meaningful to compare a simulated glass with its experimental counterpart. Indeed, glasses simulated by MD are prepared with a cooling rate that is significantly higher than those experienced in traditional experiments and, therefore, are expected to differ from (and typically be more disordered than) their experimental counterparts [Carré et al. 2016; Li et al. 2017; Vollmayr et al. 1996b]. Hence, parameterizing an empirical forcefield so as to “force” a simulated glass to exhibit properties that match experimental data may yield an unrealistic forcefield. Indeed, forcing simulated and experimental glasses to feature similar properties can typically only be achieved by “mutual compensations of errors”, wherein the forcefield is deformed so as to compensate for the fact that the simulated glass is prepared with an extremely high cooling rate. As such, although such parameterization may offer an apparent agreement between simulations and experiments for the properties that are included in the cost function, the resulting forcefield, due to its nonphysical nature, may dramatically fail at predicting properties that are not included in its cost function. In contrast, parameterizing a forcefield so as to match ab initio data is expected to yield a more realistic description of the true interatomic potential.
However, glasses prepared with such a realistic forcefield should be compared to hyperquenched glasses (i.e., prepared under high cooling rate) and, hence, may not exhibit a good agreement with experimental data prepared under slower cooling rates.

In terms of the metric to be considered in the cost function, it has recently been suggested that, in the case of glasses or liquids, using a structural property as reference (e.g., PDF) tends to offer better results than directly forcing the parameterized forcefield to match reference force values (as computed by ab initio simulations) [Carré et al. 2016, 2008]. In the example shown in Figure 6c, the cost function R𝜒 is defined as the magnitude of the difference between the PDF g(r) computed by classical MD and ab initio MD for a liquid silica system [Liu et al. 2019e]. Note that parameterizing this forcefield based on liquid (rather than glassy) configurations effectively removes any spurious effects arising from the thermal history of the system.
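
As an illustration of a structure-based cost function, the sketch below computes a normalized mismatch between two PDFs sampled on the same grid of distances. It assumes an R𝜒-type factor of the form sqrt(Σ(g_ref − g_sim)² / Σ g_ref²); whether this exact normalization matches the one used in the cited works is an assumption, and the function name is hypothetical.

```python
import math


def r_chi(g_sim, g_ref):
    """Normalized mismatch between a simulated and a reference PDF.

    Both inputs are sequences of g(r) values sampled on the same r grid.
    Returns 0 for identical curves; larger values mean a poorer match.
    """
    if len(g_sim) != len(g_ref):
        raise ValueError("both PDFs must be sampled on the same grid")
    numerator = sum((s - r) ** 2 for s, r in zip(g_sim, g_ref))
    denominator = sum(r ** 2 for r in g_ref)
    if denominator == 0.0:
        raise ValueError("reference PDF must not be identically zero")
    return math.sqrt(numerator / denominator)
```

In a parameterization loop, `g_sim` would come from a classical MD run with trial parameters and `g_ref` from the ab initio reference, and the optimizer would adjust the parameters to lower the returned value.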

Various optimization methods are available to “navigate” the cost function landscape, that is, to identify the optimal forcefield parameters that minimize the cost function [Carré et al. 2016], including MC search [Iype et al. 2013], Bayesian optimization [Liu et al. 2019c, e], particle swarm optimization [Christensen et al. 2021], or gradient descent search [Shewchuk 1994]. Note that this optimization problem is typically ill-defined since several degenerate sets of forcefield parameters can often minimize the cost function, that is, several competitive minimum positions can be found in the cost function landscape [Liu et al. 2020a, 2019c]. Figure 6c illustrates two competitive forcefields based on the same Buckingham-format functional [van Beest et al. 1990] (see (5)). These forcefields can be described as “soft” (featuring weak coulombic interactions) and “hard” (featuring more intense coulombic interactions) and both offer a competitive minimum value of the cost function R𝜒 for silica liquids [Liu et al. 2020a]. Nevertheless, despite this apparent agreement, the atomistic structures offered by the soft and hard potentials exhibit pronounced differences for structural features that are not included in the cost function (e.g., bond angular distribution or ring size distribution) [Liu et al. 2020a]. This exemplifies the need to validate the accuracy of forcefields a posteriori, i.e., after their parameterization [Liu et al. 2019c].

Finally, it is worth pointing out that both the choice of forcefield functionals and forcefield parameterization can affect the forcefield’s transferability as a function of, for instance, glass composition, pressure, or temperature [Hernandez et al. 2019; Jahn and Madden 2007]. Indeed, forcefields are often developed for a specific glass composition and temperature/pressure range (e.g., liquid silica). In general, there is no guarantee that such forcefields can offer realistic predictions far from the conditions used during their training [Sundararaman et al. 2020, 2018]. For instance, certain chemical behaviors (e.g., charge transfers) may not be similar under various conditions of temperature or pressure and, hence, may require some adjustments in the parameterization of empirical forcefields to be properly accounted for [Liu et al. 2020a]. The transferability of forcefields is also likely to be poor when the forcefield is used under conditions wherein the atoms exhibit some local environments (e.g., varying coordination numbers) that are different from those observed during the training of the forcefield. This limitation is especially pronounced for borate glasses (wherein the average coordination number of boron depends on temperature) and pressurized glasses (wherein coordination numbers tend to increase under pressure) [Salmon and Zeidler 2015]. In order to overcome these limitations and develop transferable forcefields, one first needs to propose a forcefield functional that is complex enough to capture the nature of interatomic interactions in all the conditions of interest (e.g., Buckingham-format potential for ionocovalent bond interactions in silicate glasses), but generic enough to avoid any unrealistic extrapolations due to “overfitting” [Du 2015; Huang and Kieffer 2015].
Then, the transferability of a forcefield greatly depends on its forcefield parameterization scheme, where the cost function should be designed so as to consider the structure and properties of glasses under different conditions [Liu et al. 2019c; Sundararaman et al. 2018]. Note that there always exists a balance between (i) the ability of a forcefield to accurately predict the unique, detailed properties of a specific system and (ii) the capability of a forcefield to offer a robust transferability for a wide range of compositions and conditions [Liu et al. 2019c]. To address the issue of transferability, several recent works have focussed on developing generic forcefields that can be applied to a large compositional envelope at the expense of potentially being unable to capture the fine structural details of a specific composition [Hernandez et al. 2019; Jahn and Madden 2007], including the well-established Teter potential for modified silicate glasses [Du and Corrales 2006], the Wang–Bauchy potential for borosilicate glasses [Wang et al. 2018], etc.

Thanks to their high computational efficiency as compared to ab initio simulations [Carré et al. 2008; Huang and Kieffer 2015], classical MD simulations relying on empirical forcefields have been extensively used to model glasses [Cormier et al. 2003; Mead and Mountjoy 2006; Tanguy et al. 1998]. Simulated systems include glassy silica [Carré et al. 2008; Liu et al. 2020a; van Beest et al. 1990], modified (e.g., alkali) silicate glasses [Cormack and Du 2001], aluminosilicate glasses [Bouhadja et al. 2013], borates [Kieu et al. 2011; Sundararaman et al. 2020; Wang et al. 2018], etc. It should be pointed out that, as the number of elements in the glass increases, it becomes extremely challenging to properly describe all the distinct interactions between pairs of elements (since the number of parameters that need to be adjusted scales with the square of the number of elements) [Du 2015].
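
The quadratic growth mentioned above can be made explicit with a short count. The sketch assumes a purely pairwise short-range term with a fixed number of parameters per distinct element pair (three here, as in a Buckingham-type form); both the function name and this default are illustrative assumptions.

```python
def count_pair_parameters(n_elements, params_per_pair=3):
    """Number of short-range parameters for a pairwise forcefield.

    The number of distinct element pairs (including like pairs) is
    n(n + 1)/2, so the parameter count grows as the square of n.
    """
    if n_elements < 1:
        raise ValueError("at least one element is required")
    n_pairs = n_elements * (n_elements + 1) // 2
    return n_pairs * params_per_pair
```

Under these assumptions, a two-element glass such as silica involves 3 distinct pairs and 9 short-range parameters, whereas a five-element glass involves 15 pairs and 45 parameters.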

2.6. Reactive molecular dynamics simulations

2.6.1. Gap between ab initio and classical MD simulations

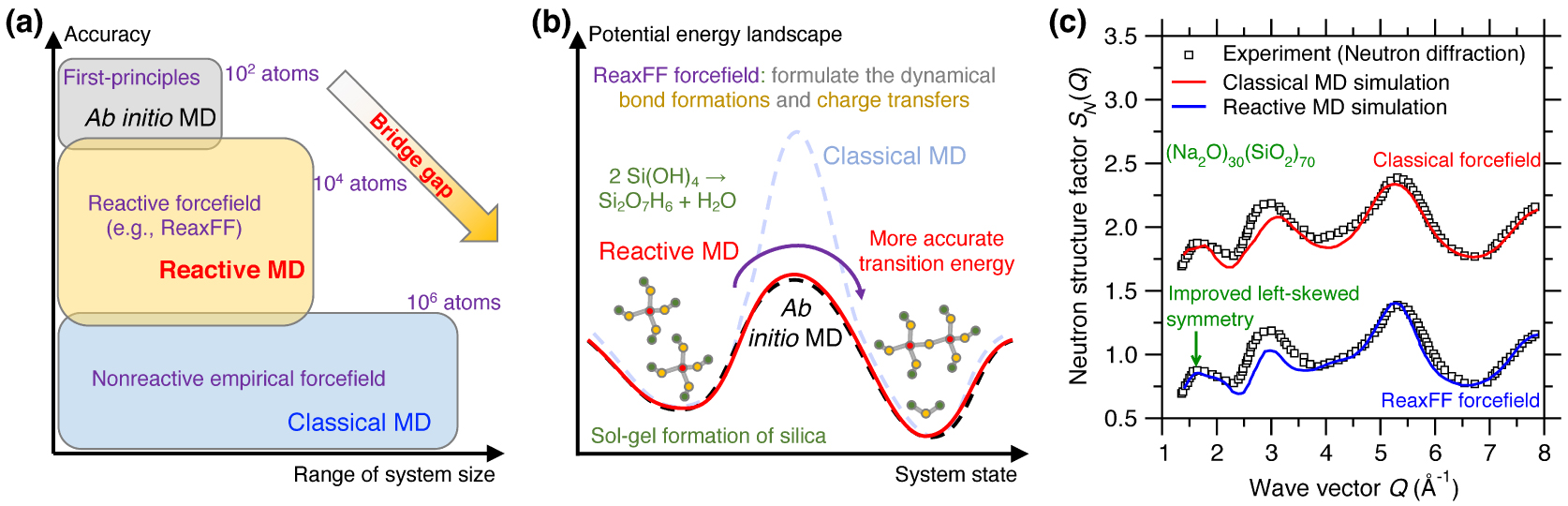

In contrast to classical MD, ab initio MD can accurately describe a chemical reaction process but is limited to small system sizes (up to hundreds of atoms) [Boero et al. 2015], while, in turn, classical MD can extend to large systems (up to millions of atoms) but lacks an accurate description of chemical reaction processes [Plimpton 1995a; Senftle et al. 2016]. Although classical MD simulations exhibit high computational efficiency [Marx and Hutter 2009; Massobrio 2015], they rely on simplified empirical forcefields, which may accurately predict certain properties (e.g., PDF g(r)) of glasses but may not offer robust predictions for more complex properties that are more sensitive to the details of the forcefields [Ganster et al. 2007; Ispas et al. 2002]. In addition, classical MD simulations relying on static forcefields and fixed charges are often unable to robustly account for charge transfer mechanisms and defective coordinated states, which prevents such simulations from properly describing the breaking and formation of bonds during chemical reactions [Buehler et al. 2006; Deng et al. 2021; Du et al. 2019a; Yu et al. 2018]. To address this limitation, reactive MD simulations, i.e., MD relying on reactive forcefields (e.g., ReaxFF) [van Duin et al. 2003, 2001], have been proposed as an intermediate option, in between classical and ab initio MD. The promise of reactive MD is to offer an accuracy that approaches that of ab initio MD while involving a computational burden that is more comparable to that of classical MD. This makes it possible for reactive MD to simulate fairly large systems (up to tens of thousands of atoms) over extended time scales (up to a few nanoseconds) with an enhanced accuracy as compared to classical MD (see Figure 7a) [Senftle et al. 2016]. However, reactive forcefields typically rely on hundreds-to-thousands of parameters and, hence, are extremely challenging to parameterize.
This has thus far limited their applications to a small number of glass families [Senftle et al. 2016].

(a) Illustration of reactive molecular dynamics (MD) simulations relying on reactive forcefield (e.g., ReaxFF) to bridge the gap between ab initio and classical MD, both in terms of range of system size and accuracy [Senftle et al. 2016]. (b) Illustration of the sol–gel formation process of glassy silica in terms of the potential energy landscape (PEL) predicted by ab initio MD, reactive MD, and classical MD [Du et al. 2018; Steinfeld et al. 1999]. Using the ab initio PEL as a reference, reactive MD shows a more accurate transition energy than classical MD [Du et al. 2018], on account of the fact that the ReaxFF forcefield explicitly models dynamical bond formations and charge transfers during the chemical reaction [van Duin et al. 2003]. (c) Comparison between the neutron structure factor SN(Q) computed by classical MD and reactive MD for a sodium silicate glass ((Na2O)30(SiO2)70) [Yu et al. 2017a]. The same experimental SN(Q) is added as a reference [Grimley et al. 1990].

2.6.2. ReaxFF forcefield

The ReaxFF forcefield is one of the most popular implementations of reactive MD [Leven et al. 2021]. As detailed in the following, the key advantages of ReaxFF are that it (i) explicitly describes the dynamical formations and breaking of bonds, (ii) accounts for charge transfers between atoms, and (iii) dynamically adjusts the interatomic interactions as a function of the local environment of each atom [van Duin et al. 2003, 2001]. This makes it possible to describe phase transitions, defect formations, or chemical reactions between atoms (e.g., to simulate the reactivity of a glass surface with water) [Buehler et al. 2006; Du et al. 2018].

In detail, the ReaxFF forcefield calculates the system’s potential energy Usys by summing up the following energy terms [van Duin et al. 2003]:

| (8) |

2.6.3. Glass reactivity

Reactive MD simulations offer an ideal tool to investigate the chemical reactivity of glasses [Senftle et al. 2016]. For instance, Figure 7b illustrates the sol–gel formation process of glassy silica (in terms of the condensation of Si(OH)4 precursors) from the viewpoint of an energy state transition in the PEL [Du et al. 2018; Steinfeld et al. 1999]. Classical MD relying on an empirical forcefield (e.g., Buckingham potential [van Beest et al. 1990]) generally offers an accurate description of near-equilibrium properties (i.e., around local minimum energy states) but typically fails at properly describing far-from-equilibrium behaviors like those experienced in transition states [Liu et al. 2019e]. In particular, classical MD typically does not offer realistic energy barrier predictions [Du et al. 2018]. In contrast, reactive MD (e.g., based on ReaxFF) can offer a more accurate description of transition energy barriers, which makes it possible to simulate sol-to-gel transitions [Du et al. 2019b, 2018; Zhao et al. 2021, 2020]. Reactive potentials also make it possible to model the reactivity of glass with aqueous solutions [Deng et al. 2019; Du et al. 2019a; Fogarty et al. 2010; Mahadevan and Du 2020; Yu et al. 2018]. The description of bond formation/breaking also makes reactive MD simulations an ideal tool to study fracture processes [Bauchy et al. 2016, 2015; To et al. 2020] or vapor deposition processes [Wang et al. 2020].

2.6.4. Glass structure

The fact that ReaxFF can dynamically adjust interatomic interactions as a function of the local environment of each atom is also a key advantage in glass simulations—since glasses can exhibit a large variety of local structures (e.g., varying coordination states). As such, when properly parameterized, reactive forcefields have the potential to offer an improved description of the atomic structure of glasses as compared to classical MD [Yu et al. 2017a, 2016]. Figure 7c shows a comparison between the neutron structure factor SN(Q) computed by classical and reactive MD simulation for a (Na2O)30(SiO2)70 glass [Yu et al. 2017a]. Taking the experimental SN(Q) as a reference [Grimley et al. 1990], reactive MD relying on the ReaxFF forcefield offers an improved description of the medium-range structure (e.g., improved left-skewed symmetry of SN(Q) at low-Q region [Yu et al. 2017a]) as compared to that offered by classical MD relying on a Buckingham forcefield [Du and Cormack 2004]. The ability of ReaxFF to properly handle coordination defects also makes it an ideal tool to study irradiation-induced vitrification [Krishnan et al. 2017a, c; Wang et al. 2017].

Although its application to glassy systems has thus far remained fairly limited, the ReaxFF forcefield has been used to model several noncrystalline systems, including amorphous silicon [Buehler et al. 2006], glassy silica [Yu et al. 2016], sodium silicate glasses [Deng et al. 2020], modified aluminosilicate glasses [Dongol et al. 2018; Liu et al. 2020c; Mahadevan and Du 2021], organosilicate glasses [Rimsza et al. 2016], and zeolitic imidazolate frameworks (ZIFs) [Yang et al. 2018], etc. Despite the unique advantages offered by ReaxFF, its applications are presently limited by the range of elements that have been parameterized [Leven et al. 2021; Senftle et al. 2016].

3. Grand challenges in atomistic simulations of glasses

3.1. Parameterization of empirical forcefields

3.1.1. High dimensionality

Empirical forcefields involve many parameters that need to be optimized [Liu et al. 2019c]. For example, ReaxFF forcefields generally comprise hundreds (or thousands) of parameters [van Duin et al. 2003]. Although classical forcefields are usually simpler, they still involve dozens of parameters—a number that increases upon increasing number of elements [Huang and Kieffer 2015]. Such high dimensionality makes it challenging to identify optimal values for the parameters, that is, to reliably find the minimum position in the cost function landscape [Carré et al. 2016; Shewchuk 1994].

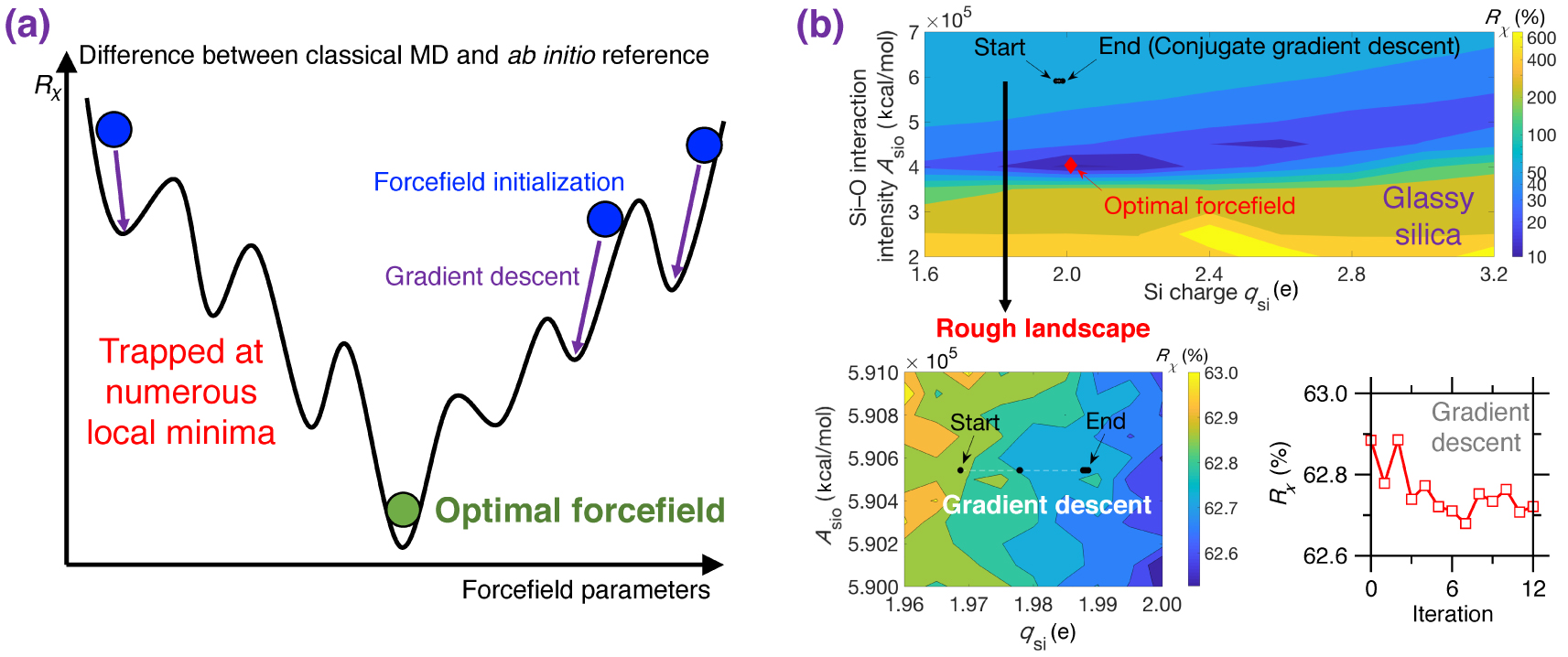

3.1.2. Roughness of cost function landscape

(a) Illustration of the rough nature of typical cost function R𝜒 landscapes for empirical forcefield parameterization. R𝜒 is defined herein as the difference between a classical MD result (e.g., PDF) and its ab initio reference [Liu et al. 2019e]. The outcome of a typical gradient descent optimization can easily get stuck at numerous local minima and, hence, strongly depends on the initial starting point [Liu et al. 2019e; Shewchuk 1994]. (b) Contour plot of the cost function R𝜒 associated with a Buckingham potential for glassy silica as a function of two forcefield parameters qSi and ASiO (see (5)) [Liu et al. 2019e]. For illustration purposes, the other parameters are fixed based on the well-established van Beest–Kramer–van Santen (BKS) potential [van Beest et al. 1990]. By using conjugate gradient descent [Shewchuk 1994], the optimization easily gets trapped in a local minimum due to the rough nature of the cost function landscape (see bottom panels), so that the optimization fails to identify optimal forcefield parameters that minimize the cost function [Liu et al. 2019e].

Figure 8a illustrates a cost function landscape as a function of arbitrary forcefield parameters, where the cost function R𝜒 is defined herein as the magnitude of the difference between the PDF g(r) computed by classical MD and its ab initio reference [Liu et al. 2019e]. Since the landscape is rough and features many local minima, traditional gradient-descent-based optimization algorithms are usually inefficient: they tend to get stuck in local minima, so that the outcome of the optimization strongly depends on the initial starting point [Shewchuk 1994].

As an illustrative example, Figure 8b shows the cost function landscape of a Buckingham forcefield for glassy silica as a function of two forcefield parameters (i.e., the silicon partial charge qSi and the short-range Si–O interaction intensity ASiO) [Liu et al. 2019e]. Even for this simple system, the cost function landscape is rough and exhibits several local minima. As a result, optimizations relying on conjugate gradient descent [Shewchuk 1994] tend to get easily stuck at positions near the starting point (see Figure 8b) [Liu et al. 2019e]. Indeed, although the cost function looks fairly smooth at a high level, a closer inspection reveals that the cost function locally features a very rough landscape showing a large number of local minima (see bottom panel in Figure 8b) [Liu et al. 2019e]. Overall, as a consequence of the rough nature of typical cost function landscapes, forcefield parameterization schemes are often strongly biased, heavily rely on intuition, or require a large number of independent optimizations (with different starting points) [Liu et al. 2019e, c].
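
The dependence on the starting point can be reproduced on a toy one-dimensional landscape. The cost function below (a parabola decorated with periodic ripples) is a hypothetical stand-in for a rough R𝜒 landscape and is unrelated to any actual forcefield; plain gradient descent is used as a simple representative of gradient-based optimizers, and the learning rate and number of steps are arbitrary choices.

```python
import math


def cost(x):
    """Toy rough landscape: global minimum at x = 0, local minima near integers."""
    return x * x + 2.0 * (1.0 - math.cos(2.0 * math.pi * x))


def cost_gradient(x):
    """Analytical derivative of the toy cost function."""
    return 2.0 * x + 4.0 * math.pi * math.sin(2.0 * math.pi * x)


def gradient_descent(x0, learning_rate=0.005, n_steps=500):
    """Plain gradient descent; converges to the minimum of the starting basin."""
    x = x0
    for _ in range(n_steps):
        x -= learning_rate * cost_gradient(x)
    return x


def multi_start(starting_points):
    """Run independent descents and keep the one with the lowest final cost."""
    candidates = [gradient_descent(x0) for x0 in starting_points]
    return min(candidates, key=cost)
```

A descent started at `x0 = 2.9` remains trapped in the local minimum close to x = 3, whereas one started at `x0 = 0.3` reaches the global minimum at x = 0. Repeating the optimization from several starting points, as in `multi_start`, mitigates the problem at the price of many independent runs.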

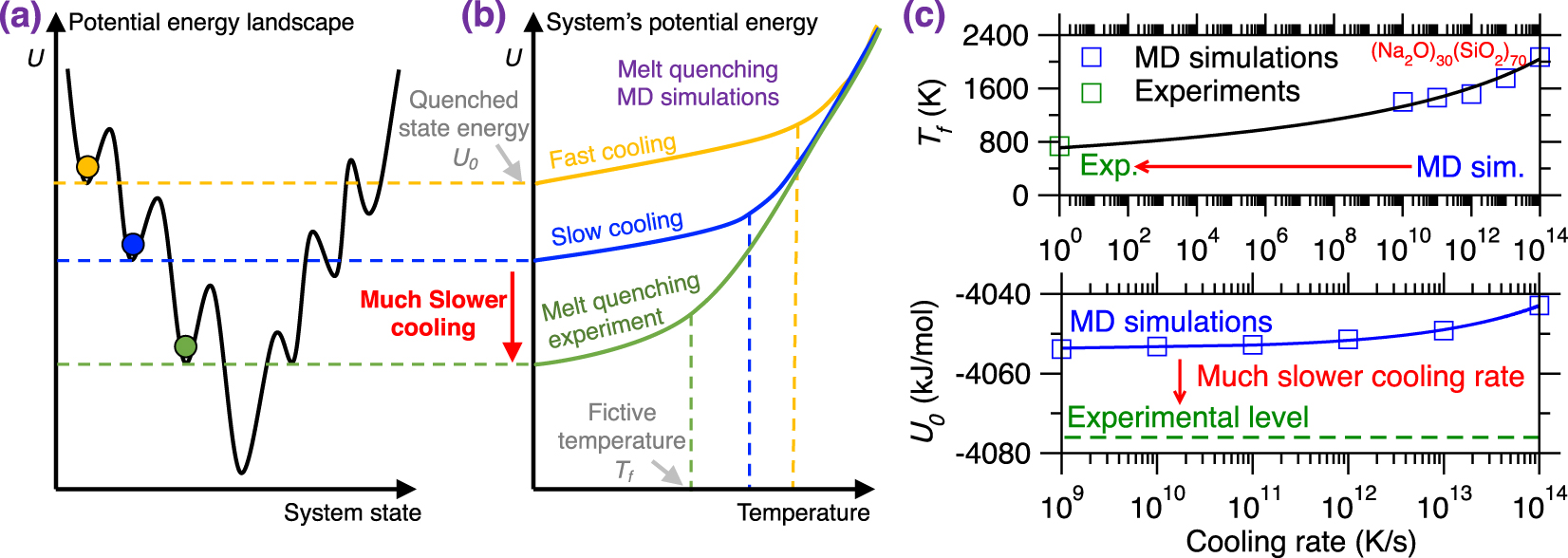

3.2. Effect of the cooling rate

3.2.1. Importance of thermal history

Due to the short timescale that is accessible to MD simulations (trajectories of up to a few nanoseconds) [Plimpton 1995a], glass simulations relying on the melt-quenching approach are limited to ultrafast cooling rates (10¹⁴–10⁹ K/s), which far exceed the cooling rates achieved in conventional experiments (10²–10⁰ K/s) [Li et al. 2017]. This is important since, glasses being out-of-equilibrium phases, their structure and properties depend on their thermal history. Specifically, glasses tend to reach deeper positions in their PEL upon decreasing cooling rates (i.e., they become more stable, see Figure 9a) [Debenedetti and Stillinger 2001].

(a) Illustration of different local minimum states in the potential energy landscape (PEL) that are reached by melt-quenched glasses upon different cooling rates [Debenedetti and Stillinger 2001]. Slower cooling rates result in more stable glasses, i.e., featuring a deeper position in the PEL. (b) Evolution of the potential energy U of a glass with respect to temperature during a melt-quenching molecular dynamics (MD) simulation under fast cooling (yellow) and slow cooling (blue) [Debenedetti and Stillinger 2001]. The energy profile of a glass subjected to a melt-quenching experiment (i.e., at much lower cooling rate) is added as a reference (green). (c) Fictive temperature Tf (upper panel) and final potential energy U0 (bottom panel) as a function of cooling rate for a (Na2O)30(SiO2)70 glass offered by both melt-quenching MD simulations (blue squares) and experiments (green squares) [Li et al. 2017; Zhou et al. 2020]. The lines are to guide the eyes.

Figure 9b illustrates the evolution of the potential energy U of a glass with respect to the decreasing temperature during melt-quenching with varying cooling rates: (i) MD simulation with a fast cooling rate (typically 10 K/ps), (ii) MD simulation with a slow cooling rate (typically 10⁻³ K/ps), and (iii) experimental melt-quench (typically 10⁻¹² K/ps) [Debenedetti and Stillinger 2001]. At high temperature, the three systems are in the equilibrium liquid state and, hence, exhibit the same potential energy U [Debenedetti and Stillinger 2001]. As temperature decreases, U decreases (the slope depending on the heat capacity of the liquid) and all three systems enter the metastable supercooled liquid state following the same linear master curve [Debenedetti and Stillinger 2001]. From this point, the transition from the metastable supercooled liquid state to the out-of-equilibrium glassy state (i.e., at the glass fictive temperature Tf) occurs when the relaxation time of the system exceeds the observation time. Although, at fixed temperature, all three systems exhibit the same relaxation time, the observation time is significantly shorter in MD simulations, especially at fast cooling rates. As such, glasses simulated by MD using a fast cooling rate enter the glassy state at a higher temperature and remain stuck in high-energy states in the PEL (see Figures 9a and b) [Debenedetti and Stillinger 2001]. As a result, the structure and properties of glasses simulated by MD strongly depend on the choice of the cooling rate, and simulated glasses tend to be more stable (i.e., lower final energy U0, more ordered) upon decreasing cooling rates.
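To make the timescale gap concrete, the following sketch converts the three typical cooling rates quoted above from K/ps to K/s and estimates the duration of a linear quench. The start and end temperatures (3000 K and 300 K) and the 1 fs timestep are assumptions made for illustration; they are not taken from the cited works.

```python
PS_PER_S = 1.0e12  # picoseconds per second

def rate_k_per_s(rate_k_per_ps):
    """Convert a cooling rate from K/ps to K/s."""
    return rate_k_per_ps * PS_PER_S

def quench_time_ps(t_melt_k, t_final_k, rate_k_per_ps):
    """Duration (in ps) of a linear quench from t_melt_k down to t_final_k."""
    return (t_melt_k - t_final_k) / rate_k_per_ps

def n_md_steps(duration_ps, timestep_fs=1.0):
    """Number of MD steps needed to cover duration_ps (timestep is an assumption)."""
    return duration_ps * 1000.0 / timestep_fs

# Hypothetical quench window, chosen for illustration only.
T_MELT_K, T_FINAL_K = 3000.0, 300.0

typical_rates_k_per_ps = {
    "fast MD": 10.0,
    "slow MD": 1.0e-3,
    "experiment": 1.0e-12,
}

for label, rate in typical_rates_k_per_ps.items():
    duration = quench_time_ps(T_MELT_K, T_FINAL_K, rate)
    print(
        f"{label:>10}: {rate_k_per_s(rate):.1e} K/s, "
        f"quench time = {duration:.2e} ps, "
        f"MD steps at 1 fs = {n_md_steps(duration):.2e}"
    )
```

Under these assumptions, the number of integration steps grows in inverse proportion to the cooling rate, which is why experimental cooling rates remain out of reach of direct melt-quenching MD.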

3.2.2. Gap between simulated versus experimental glasses

Figure 9c shows the evolution of the glass fictive temperature Tf and final potential energy U0 as a function of the cooling rate for both melt-quenching experiments and MD simulations, using the case of a (Na2O)30(SiO2)70 glass as an archetypical example [Li et al. 2017; Zhou et al. 2020]. As expected, slower cooling rates result in lower Tf and U0. However, due to the huge gap between the cooling rates that are accessible to experiments and simulations [Li et al. 2017], experimental values of Tf and U0 tend to be much lower than their simulation counterparts [Li et al. 2017; Zhou et al. 2020]. This difference of many orders of magnitude in cooling rate is a critical limitation of MD simulations since it results in a systematic difference between experimental and simulated glasses [Li et al. 2017; Vollmayr et al. 1996b]. Note that different types of glasses may depend differently on the cooling rate, so that certain glasses are more sensitive to variations in the cooling rate than others [Liu et al. 2018]. It should also be noted that certain glass properties or structural features (e.g., medium-range order structure) are more sensitive to the cooling rate than others (e.g., short-range order structure) [Li et al. 2017].

3.3. Finite size effects

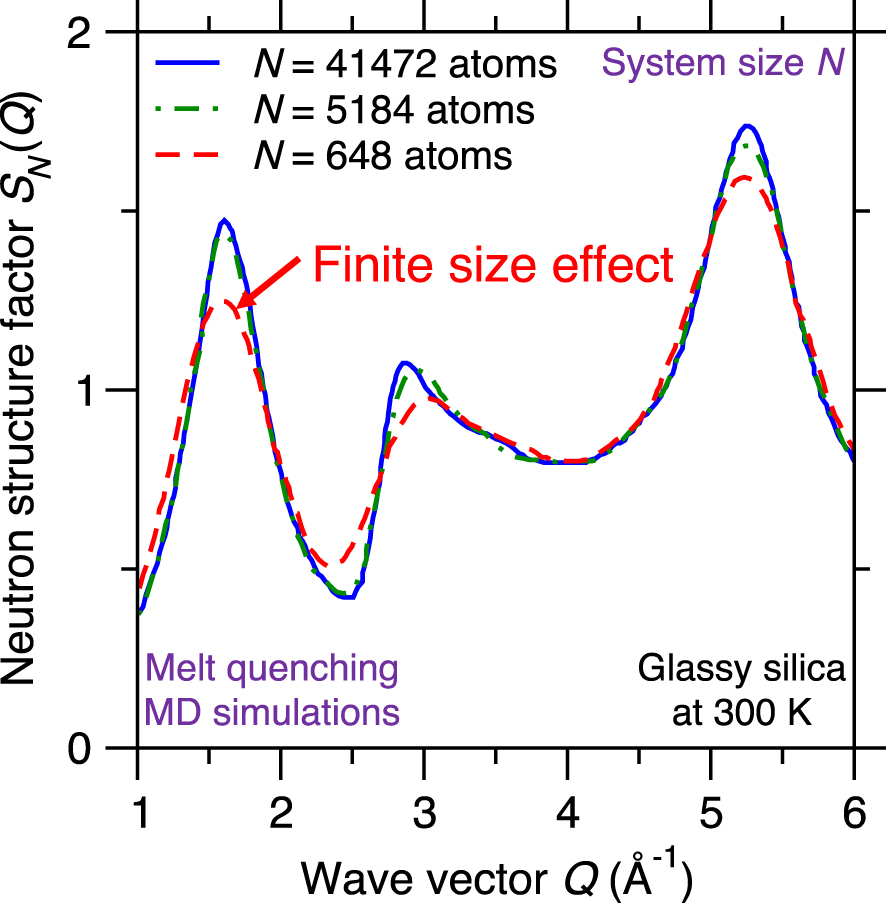

Neutron structure factor SN(Q) of silica glasses prepared by melt-quenching molecular dynamics (MD) simulations for varying system sizes, namely, N = 648, 5184, and 41,472 atoms [Nakano et al. 1994].

Due to their high computational cost, atomistic simulations are limited to fairly small systems (i.e., small numbers of atoms). Although PBC are typically used to mitigate any spurious effects arising from the presence of surfaces, the limited size of simulated glasses (from hundreds to millions of atoms) can affect the accuracy of the simulation [Du 2015]. Difficulties related to the system size include: (i) lack of statistical sampling if the number of atoms is too low [Berthier et al. 2012; Horbach et al. 1996], (ii) enhanced fluctuations in the thermodynamic properties of the simulated system (e.g., temperature or pressure) since the magnitude of the fluctuations scales inversely with the square root of the number of atoms [Du 2019; Leach 2001], and (iii) systematic errors arising from the limited size of the simulation box (e.g., absence of large rings or extended collective vibrational modes) [Ganster et al. 2004; Nakano et al. 1994].
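As a rough numerical illustration of points (ii) and (iii), the sketch below evaluates the 1/√N scaling of relative fluctuations and the smallest nonzero wavevector, 2π/L, that a cubic periodic box of edge L can represent. The three system sizes are those of the silica study of [Nakano et al. 1994]; the number density (about 0.066 atoms/Å³, corresponding to roughly 2.2 g/cm³ for silica glass) and the cubic box shape are assumptions introduced here.

```python
import math

# Assumed number density of silica glass (atoms per cubic angstrom).
NUMBER_DENSITY = 0.066

def relative_fluctuation(n_atoms):
    """Relative magnitude of thermodynamic fluctuations, scaling as 1/sqrt(N)."""
    return 1.0 / math.sqrt(n_atoms)

def box_length(n_atoms, number_density=NUMBER_DENSITY):
    """Edge length (in angstrom) of a cubic box holding n_atoms at the given density."""
    return (n_atoms / number_density) ** (1.0 / 3.0)

def q_min(n_atoms, number_density=NUMBER_DENSITY):
    """Smallest nonzero wavevector (in 1/angstrom) compatible with the periodic box."""
    return 2.0 * math.pi / box_length(n_atoms, number_density)

for n in (648, 5184, 41472):
    print(
        f"N = {n:>6}: fluctuation ~ {relative_fluctuation(n):.4f}, "
        f"L = {box_length(n):.1f} A, Q_min = {q_min(n):.3f} 1/A"
    )
```

Each eightfold increase in the number of atoms doubles the box length and halves the smallest accessible wavevector, while reducing the relative fluctuations by a factor of only √8. This is one way to see why the low-Q region is especially sensitive to the system size.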

Figure 10 shows the computed neutron structure factor SN(Q) of a glassy silica system prepared by melt-quenching MD simulations while using three different system sizes N, namely, 648 (small system), 5184 (intermediate system), and 41,472 atoms (large system) [Nakano et al. 1994]. The computed structure factor SN(Q) is found to depend on the system size, especially in the low-Q region (which captures the medium-range order structure of the glass), before eventually converging for large systems [Nakano et al. 1994]. This indicates that glass simulations relying on small simulation boxes (and, hence, small numbers of atoms) do not always properly capture the structure and properties of glasses [Du 2015; Ganster et al. 2004; Nakano et al. 1994]. In practice, one needs to select a system size that is large enough to mitigate finite size effects, but small enough to ensure a reasonable computational cost [Du 2015].

3.4. Limitations of reverse Monte Carlo (RMC) simulations

3.4.1. Need for structural data

Reverse Monte Carlo (RMC) simulations aim to construct atomistic structures that match one or several structural fingerprints provided by experiments (often, the PDF) [McGreevy 2001]. As such, RMC simulations offer an attractive alternative to MD simulations since such simulations can effectively bypass the melt-quench route to form a glass and, hence, are not affected by high cooling rate effects. However, RMC simulations largely rely on the availability (and accuracy) of experimental data. This limits the predictive power of RMC simulations since this approach does not make it possible to simulate in silico glasses that have not been experimentally synthesized and characterized yet. This also limits the range of conditions (temperature, pressure, etc.) to the ones that have already been experimentally explored.

3.4.2. Ill-defined nature of RMC simulations

(a) Illustration of the existence of competitive minima in the cost function landscape for a reverse Monte Carlo (RMC) simulation [McGreevy 2001]. The cost function R𝜒 is defined herein as the magnitude of the difference between the simulated and experimental PDFs (see (1)) [Zhou et al. 2020]. Compared to the targeted minimum (green circle, which exhibits both low R𝜒 and potential energy U), some competitive minima (red sphere) exhibit the same value of the cost function R𝜒, but high values of U, i.e., they correspond to unstable energy states in the potential energy landscape (PEL) [Zhou et al. 2020]. (b) Illustration of atomic configurations that exhibit the same PDF g(r) while presenting notably different structures [Pandey et al. 2016b]. (c) Evolution of the cost function R𝜒 (upper panel) and potential energy U (bottom panel) as a function of the number of Monte Carlo (MC) search steps during an RMC simulation of a sodium silicate glass ((Na2O)30(SiO2)70) [Zhou et al. 2020]. The R𝜒 and U values obtained for the same glass prepared by melt-quenching molecular dynamics (MD) simulation with a cooling rate of 0.001 K/ps are added as references (green dashed lines) [Zhou et al. 2020].

RMC simulations adopt the MC algorithm to search for an atomic structure that exhibits a minimum in the cost function landscape (see upper panel of Figure 11a) [Allen and Tildesley 2017], wherein the cost function R𝜒 is defined as the magnitude of difference between the simulated structural fingerprints and their experimental references [Zhou et al. 2020]. This can be formulated as an optimization problem, wherein some degrees of freedom (i.e., the positions of the atoms) are adjusted so as to minimize a cost function. However, the optimization problem at the core of RMC simulations is intrinsically ill-defined. Indeed, although a given three-dimensional structure yields unique values for the fingerprints, a given fingerprint can be associated with a large number of different three-dimensional structures. That is, the structure–fingerprint relationship is not reversible. For instance, due to the complex, disordered nature of glasses [Bunde and Havlin 2012], very different structures can be associated with the same PDF (see Figure 11b) [Pandey et al. 2016b]. This is a consequence of the fact that the PDF is simply a one-dimensional signature of a glass structure (averaged over various types of elements) and, therefore, only offers a highly compressed representation of a three-dimensional structure—which does not contain enough information to robustly reconstruct the structure itself. In short, RMC simulations do not exhibit a single solution, which manifests itself by the fact that the cost function landscape exhibits various competitive minima [Pandey et al. 2015]. Since traditional RMC simulations do not embed any knowledge of the interatomic interactions, the structures generated by RMC may not be thermodynamically stable [Zhou et al. 2020] and the regions of the PEL that are explored in RMC simulations may be very different from those that are accessed during the melt-quenching of a glass (see bottom panel in Figure 11a) [Pandey et al. 2015].
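The non-uniqueness discussed above can be illustrated with a toy RMC loop. This is a minimal sketch under strong assumptions: the "experimental" fingerprint is an unnormalized histogram of pair distances computed from a hidden random configuration, the cost is a plain sum of squared differences (not necessarily the R𝜒 defined in (1)), and the acceptance rule is a generic Metropolis criterion with an arbitrary effective temperature. All names and parameter values are hypothetical.

```python
import numpy as np

def pair_histogram(pos, box, bins):
    """Histogram of minimum-image pair distances (unnormalized stand-in for the PDF)."""
    diff = pos[:, None, :] - pos[None, :, :]
    diff -= box * np.round(diff / box)          # periodic boundary conditions
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    upper = np.triu_indices(len(pos), k=1)      # count each pair once
    hist, _ = np.histogram(dist[upper], bins=bins)
    return hist.astype(float)

def cost(hist, target):
    """Sum of squared differences between simulated and target fingerprints."""
    return float(((hist - target) ** 2).sum())

def rmc(pos, box, bins, target, n_steps, max_disp, temperature, rng):
    """Move one random atom per step; accept with a Metropolis rule on the cost."""
    pos = pos.copy()
    current = cost(pair_histogram(pos, box, bins), target)
    history = [current]
    for _ in range(n_steps):
        i = rng.integers(len(pos))
        old = pos[i].copy()
        pos[i] = (old + rng.uniform(-max_disp, max_disp, size=3)) % box
        trial = cost(pair_histogram(pos, box, bins), target)
        if trial <= current or rng.random() < np.exp(-(trial - current) / temperature):
            current = trial                     # accept the move
        else:
            pos[i] = old                        # reject and restore
        history.append(current)
    return pos, np.array(history)

rng = np.random.default_rng(0)
box, n_atoms = 10.0, 30
bins = np.linspace(0.0, box / 2.0, 26)

reference = rng.uniform(0.0, box, size=(n_atoms, 3))   # hidden "true" structure
target = pair_histogram(reference, box, bins)
start = rng.uniform(0.0, box, size=(n_atoms, 3))

final, history = rmc(start, box, bins, target, n_steps=2000,
                     max_disp=0.5, temperature=1.0, rng=rng)
print("initial cost:", history[0], " final cost:", history[-1])
```

The loop only ever sees the one-dimensional fingerprint, so nothing in it steers the atoms toward the hidden reference configuration or toward low potential energy. Any configuration that reproduces the histogram is an equally valid outcome, which is the ill-defined character described in the text.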

Figure 11c shows the evolution of the optimization cost function R𝜒 and the potential energy U with respect to the number of MC search steps during an RMC simulation for a (Na2O)30(SiO2)70 glass [Zhou et al. 2020]. Note that the values of R𝜒 and U obtained for the same glass prepared by a melt-quenching MD simulation are also added as references [Zhou et al. 2020]. As expected, R𝜒 gradually decreases and eventually becomes lower than the MD reference, that is, the RMC simulation offers an optimal structure that indeed matches the experimental structural fingerprints (see upper panel of Figure 11c) [Zhou et al. 2020]. However, upon RMC refinement, the potential energy U does not decrease monotonically but, rather, tends to increase in the late stages of the MC search. Eventually, the final glass structure exhibits a notably higher potential energy U than that of the MD-simulated glass reference (see bottom panel of Figure 11c) [Zhou et al. 2020]. These results exemplify that an apparently excellent agreement with experimental data (e.g., based on the PDF) does not always translate into a realistic glass structure [Pandey et al. 2015; Zhou et al. 2020]. The ill-defined nature of RMC can be partially mitigated by introducing an energy penalty term U in the MC cost function to favor structures featuring low potential energy, an approach that is typically referred to as hybrid RMC [Bousige et al. 2015; Jain et al. 2006; Opletal et al. 2002]. However, hybrid RMC requires trial-and-error tests or intuition to adjust the relative weights of R𝜒 and U in the cost function. In addition, hybrid RMC simulations are significantly more computationally expensive than traditional RMC simulations since the potential energy of the system U must be computed at each MC step [Zhou et al. 2020].
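The role of the weights in hybrid RMC can be sketched as follows. The weighted-sum form of the cost and the Metropolis acceptance rule below are generic assumptions and are not necessarily the exact formulations used in the cited hybrid RMC works; the two candidate structures and all numerical values are made up for illustration.

```python
import math

def hybrid_cost(r_chi, energy, w_chi=1.0, w_u=0.0):
    """Weighted sum of the fingerprint mismatch r_chi and the potential energy."""
    return w_chi * r_chi + w_u * energy

def accept_probability(cost_old, cost_new, temperature=1.0):
    """Generic Metropolis acceptance probability for a trial move."""
    delta = cost_new - cost_old
    return 1.0 if delta <= 0.0 else math.exp(-delta / temperature)

# Two hypothetical candidate structures with identical fingerprint mismatch
# but different potential energies (arbitrary units).
stable = {"r_chi": 0.05, "energy": -10.0}
unstable = {"r_chi": 0.05, "energy": -4.0}

for w_u in (0.0, 0.1):
    c_stable = hybrid_cost(stable["r_chi"], stable["energy"], w_u=w_u)
    c_unstable = hybrid_cost(unstable["r_chi"], unstable["energy"], w_u=w_u)
    p = accept_probability(c_stable, c_unstable)
    print(f"w_u = {w_u}: cost(stable) = {c_stable:.2f}, "
          f"cost(unstable) = {c_unstable:.2f}, "
          f"P(stable -> unstable) = {p:.3f}")
```

With a zero energy weight, the two candidates are indistinguishable and a move from the stable to the unstable structure is always accepted. A nonzero weight breaks the tie in favor of the low-energy structure, but its value has to be chosen by the user, which is the trial-and-error step mentioned above.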

4. New developments in atomistic modeling of glasses

4.1. Machine-learned forcefields

4.1.1. Types of machine-learned forcefields