1 The concept of feedback circuit

Regulation can be defined as the process that adjusts the rate of production of the elements of a system to the state of the system itself and of relevant environmental constraints. In order to realise these adjustments, the system must comprise a network of interactions that allows its machinery to be aware of the level of the elements that have to be synthesised or degraded.

When one thinks of biological regulation, one almost always refers to the mechanisms involved in homeostasis, which stabilise our body temperature, blood pressure, etc., and cause the concentrations of hormones to oscillate, each with its own periodicity. Homeostasis functions like a thermostat and tends to keep the level of the variables (with or without oscillations) at or near a supposedly optimal value, usually far both from the lower boundary level that would prevail if the machinery were kept off and from the upper boundary level that would prevail if it were kept on without control.

There is, however, another type of regulation, which, instead of forcing a level to remain in an intermediate range, forces it, on the contrary, to a lasting choice between two extreme boundaries. In biology, the most obvious example is found in the crucial process of cell differentiation. It has been clear for some time that in higher living beings each cell (with few exceptions) contains all the genes of the organism. Different cell types do not differ by which genes they possess, but by which of their genes are ‘on’ and which are ‘off’: one says that differentiation is due to ‘epigenetic’ differences. As first suggested by Delbrück [1], epigenetic differences, including those involved in cell differentiation, can be understood in terms of a more general concept well known to chemists and physicists, namely multistationarity. More concretely, a given cell can persist lastingly in any one of a number of steady states, which differ essentially from each other by the genes that are on and those that are off.

Proper regulation of the operation of a system is achieved by feedback circuits, which are the main subject of this paper. The concept of feedback circuits was developed long ago by biologists as sets of oriented cyclic interactions. If $x_1$ acts on $x_2$, which acts on $x_3$, which in turn acts on $x_1$, one says that there is a feedback circuit, or, for short, a circuit $x_1 \to x_2 \to x_3 \to x_1$ (the term ‘feedback loop’ is more frequently used by biologists; we prefer ‘feedback circuit’ because in graph theory ‘loop’ is used only for one-element circuits). In a circuit, each element exerts a direct action on the next element, but also an indirect action on all other elements, including itself. More concretely, the present concentration of an element exerts, via the other elements of the circuit, an influence on its own rate of synthesis, and hence on its future level.

There are two types of feedback circuits. Although this may not be obvious, either each element of a circuit exerts a positive action (activation) on its own future evolution, or each element exerts a negative action (repression) on this evolution. Accordingly, one speaks of a positive or of a negative circuit, respectively. Whether a circuit is positive or negative simply depends on the parity of the number of negative interactions in the circuit: a circuit with an even number of negative interactions is positive, while if this number is odd the circuit is negative. The sign of a circuit is thus defined as $(-1)^q$, where $q$ is the number of negative interactions in the circuit.
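As a small illustration, the parity rule can be written as a one-line function. This is only a sketch: encoding each interaction as `+1` (activation) or `-1` (repression), and the name `circuit_sign`, are our own choices, not notation from the text.

```python
def circuit_sign(interaction_signs):
    """Sign of a feedback circuit: (-1)**q, where q counts the negative interactions.

    interaction_signs: one entry per arrow of the circuit, +1 for an activation,
    -1 for a repression (hypothetical encoding used only for this sketch).
    """
    q = sum(1 for s in interaction_signs if s < 0)
    return (-1) ** q


# Two repressions and one activation: even number of negative arrows, positive circuit
print(circuit_sign([-1, -1, +1]))  # 1
# One repression and two activations: odd number, negative circuit
print(circuit_sign([-1, +1, +1]))  # -1
```

Equivalently, the sign is the product of the signs of the individual interactions, which is the form in which it is read off the Jacobian matrix.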

The properties of the two types of feedback circuits are strikingly different. As a matter of fact, they are responsible, respectively, for the two types of regulation described above. A negative circuit can function like a thermostat and generate homeostasis, with or without oscillations. Positive circuits can force a system to choose lastingly between two or more steady states; they generate multistationarity (or multistability). This contrasting behaviour of the two types of circuits can be justified without any difficulty if one formalises the circuits in terms of systems of ordinary differential equations or by ‘logical’ methods [2,3].

The above points to the notion that negative circuits are involved in homeostasis and positive circuits in multistationarity. However, it has progressively become apparent that one can be much more precise. It was conjectured by Thomas [4] that (i) a positive circuit is a necessary condition for multistationarity and (ii) a negative circuit is a necessary condition for stable periodicity. These statements were further analysed and subjected to formal proofs [5–9].

To briefly come back to the biological role of circuits, we would like to point out that the regulatory interactions found in biology (as well as in other disciplines) are almost invariably non-linear, and most often sigmoid in shape. For sigmoid or stepwise interactions, a positive circuit can generate three, and only three, steady states, one of them unstable and the other two stable, whatever the number of elements in the circuit. In this perspective, one may wonder how living systems succeed in generating the many steady states required to account for the many cell types observed in higher organisms. The answer is simple: in order to have many steady states, one needs several positive circuits. For sigmoid or stepwise nonlinearities, $m$ positive circuits can generate up to $3^m$ steady states, $2^m$ of which are stable. Thus, eight genes, each subject to positive autocontrol, would suffice to account for $2^8 = 256$ different cell types.
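The counting argument can be checked on a toy model. The sketch below assumes a hypothetical sigmoid autocontrol, $dx/dt = x^2/(1+x^2) - \gamma x$ with $\gamma = 0.4$ (our choice for illustration, not a model taken from the text). For $\gamma < 1/2$ it has three steady states, $x = 0$ and the two roots of $\gamma x^2 - x + \gamma = 0$, of which the outer two are stable. For $m$ independent genes of this kind the Jacobian is diagonal, so a combined state is stable only if every gene sits on one of its own stable states.

```python
from itertools import product

GAMMA = 0.4  # hypothetical degradation rate; three steady states require GAMMA < 0.5


def rate(x, gamma=GAMMA):
    """Hypothetical positive autocontrol: Hill-type (n = 2) synthesis minus linear decay."""
    return x**2 / (1 + x**2) - gamma * x


def d_rate(x, gamma=GAMMA):
    """Derivative of rate(x); a steady state is stable when it is negative."""
    return 2 * x / (1 + x**2) ** 2 - gamma


def steady_states(gamma=GAMMA):
    """x = 0 plus the two roots of gamma*x**2 - x + gamma = 0."""
    disc = (1 - 4 * gamma**2) ** 0.5
    return [0.0, (1 - disc) / (2 * gamma), (1 + disc) / (2 * gamma)]


def count_states(m, gamma=GAMMA):
    """Total and stable steady states of m independent self-activating genes."""
    single = [(x, d_rate(x, gamma) < 0) for x in steady_states(gamma)]
    total = stable = 0
    for combo in product(single, repeat=m):
        total += 1
        stable += all(is_stable for _, is_stable in combo)
    return total, stable


print(steady_states())    # approximately 0, 0.5 and 2.0 (middle one unstable)
print(count_states(8))    # (6561, 256)
```

With eight such genes the sketch finds $3^8 = 6561$ steady states, $2^8 = 256$ of them stable, in line with the figures quoted above.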

2 Description of circuits in terms of the elements of the Jacobian matrix

When a system is described in terms of ordinary differential equations, circuits can be defined in terms of the elements of the Jacobian matrix of the system (see, for example, [5,9,10]). The idea of describing the interactions in complex systems in terms of the signs of the terms of the Jacobian matrix was introduced long ago by the economists Quirk and Ruppert [11], although without explicit reference to feedback circuits, and by May [12] and Tyson [13].

Consider the Jacobian matrix of a system of ordinary differential equations (ODEs), $dx_i/dt = f_i(x_1, \dots, x_n)$. If the term $a_{ij} = \partial f_i/\partial x_j$ is non-zero, variations of variable $x_j$ influence the time derivative of variable $x_i$. In this case, we say for short that variable $j$ acts on variable $i$, and we write $x_j \to x_i$. This action is defined as positive or negative according to the sign of $a_{ij}$.
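In practice, the sign pattern of the Jacobian can also be estimated numerically. The sketch below uses central finite differences (the helper names `jacobian` and `interactions`, the step `h` and the threshold `tol` are our own choices) and applies them to the three-variable system $\{dx/dt = y,\ dy/dt = z,\ dz/dt = 1 - x^2\}$ discussed in Section 3, evaluated at the point $(-1, 0, 0)$.

```python
import numpy as np


def jacobian(f, x, h=1e-6):
    """Central finite-difference Jacobian: J[i, j] approximates d f_i / d x_j."""
    x = np.asarray(x, dtype=float)
    n = x.size
    J = np.empty((n, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = h
        J[:, j] = (f(x + e) - f(x - e)) / (2 * h)
    return J


def interactions(J, tol=1e-8):
    """List the actions x_j -> x_i read off the non-zero terms a_ij (1-based labels)."""
    out = []
    for i, j in zip(*np.nonzero(np.abs(J) > tol)):
        sign = "+" if J[i, j] > 0 else "-"
        out.append(f"x{j + 1} -> x{i + 1} ({sign}, a{i + 1}{j + 1} = {J[i, j]:.3g})")
    return out


# dx/dt = y, dy/dt = z, dz/dt = 1 - x**2
f = lambda u: np.array([u[1], u[2], 1 - u[0] ** 2])
for line in interactions(jacobian(f, [-1.0, 0.0, 0.0])):
    print(line)   # x2 -> x1, x3 -> x2 and x1 -> x3, all positive at x = -1
```

A finite-difference estimate only gives the signs at the chosen point; for a non-linear system, such as this one, the sign of $a_{31} = -2x$ changes with the location in phase space.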

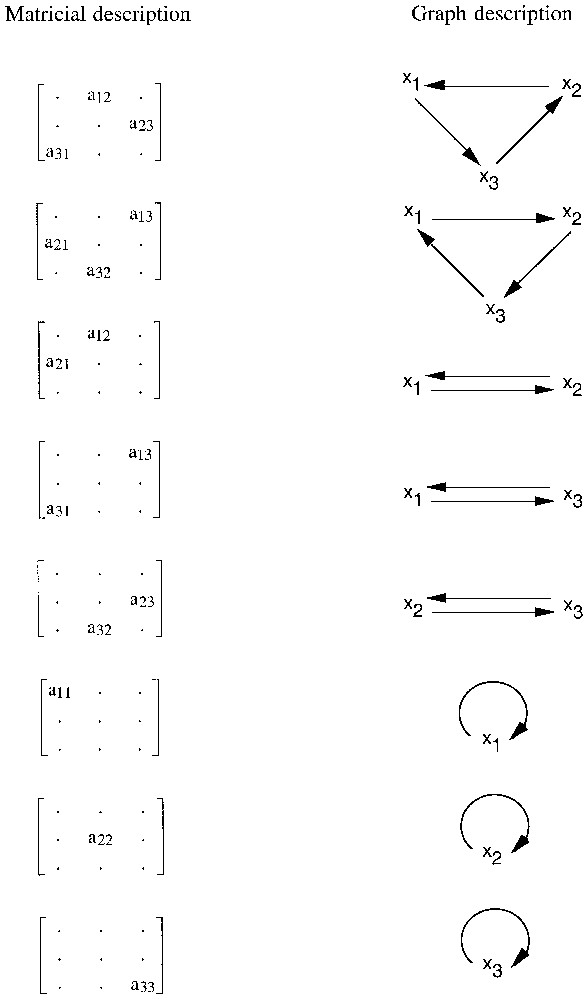

Consider now a sequence of non-zero terms of the Jacobian matrix, such as $a_{13}$, $a_{21}$, $a_{32}$, in which the $i$ (row) indices 1, 2, 3 and the $j$ (column) indices 3, 1, 2 are circular permutations of each other. Non-zero $a_{13}$ means $x_3 \to x_1$, non-zero $a_{21}$ means $x_1 \to x_2$ and non-zero $a_{32}$ means $x_2 \to x_3$; thus, we have the three-element circuit $x_1 \to x_2 \to x_3 \to x_1$. In this way, all the circuits that are present in a system can be read from its Jacobian matrix. Fig. 1 shows the circuits that are possible for a three-variable system.

Fig. 1. The circuits in three-variable systems.
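Reading the circuits off the matrix can be sketched as an exhaustive search over cyclic orderings of the variables, which is adequate for systems as small as those considered here. The function `circuits` below is a hypothetical helper, not taken from the text; it follows the convention that the arrow $x_j \to x_i$ corresponds to the term $a_{ij}$, and returns each circuit with its sign, the product of the signs of its terms.

```python
from itertools import combinations, permutations

import numpy as np


def circuits(J, tol=1e-12):
    """Enumerate the elementary circuits of the interaction graph of J.

    Each circuit is returned as (terms, sign): terms is a list of 0-based
    (i, j) pairs of the Jacobian entries a_ij it uses, sign is +1 or -1.
    """
    J = np.asarray(J, dtype=float)
    n = J.shape[0]
    found = []
    for k in range(1, n + 1):
        for nodes in combinations(range(n), k):
            # keep the smallest variable first so each circuit is listed once
            for rest in permutations(nodes[1:]):
                cycle = (nodes[0],) + rest
                # arrow cycle[t] -> cycle[t + 1] is the term a_{cycle[t+1], cycle[t]}
                terms = [(cycle[(t + 1) % k], cycle[t]) for t in range(k)]
                if all(abs(J[i, j]) > tol for i, j in terms):
                    sign = int(np.sign(np.prod([J[i, j] for i, j in terms])))
                    found.append((terms, sign))
    return found


def label(terms):
    """1-based labels such as 'a21 a32 a13'."""
    return " ".join(f"a{i + 1}{j + 1}" for i, j in terms)


# Only a13, a21, a32 non-zero: the single three-element circuit x1 -> x2 -> x3 -> x1
J = np.zeros((3, 3))
J[0, 2], J[1, 0], J[2, 1] = 1.0, -1.0, -1.0
for terms, sign in circuits(J):
    print(label(terms), "sign", sign)   # a21 a32 a13 sign 1
```

For a matrix with no zero entry, the same function lists eight circuits: three one-element, three two-element and two three-element circuits.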

Note that the matrix and graph descriptions of circuits are dual to each other: a non-zero element of the matrix corresponds to an arrow (not a vertex) of the graph. The circuit itself can be symbolised either by the graph or by the product of the relevant terms of the matrix.

Unions of disjoint circuits are sets of circuits that share no variable. For example, if terms $a_{12}$, $a_{21}$ and $a_{33}$ are non-zero, we have a union of two disjoint circuits: a two-element circuit between variables $x_1$ and $x_2$ and a one-element circuit involving variable $x_3$. A general and elegant definition suggested by M. Cahen (personal communication) applies both to circuits and to unions of disjoint circuits: a circuit or union of disjoint circuits can be identified by the existence of a set of non-zero terms of the matrix such that the sets of their $i$ (row) and $j$ (column) indices are equal. The equality of the two sets of indices can be checked, for example, for the sets of terms $\{a_{13}, a_{21}, a_{32}\}$ (a three-element circuit), $\{a_{12}, a_{21}\}$ (a two-element circuit), $a_{11}$ (a one-element circuit), or $\{a_{12}, a_{21}, a_{33}\}$ (a union of two disjoint circuits).
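Cahen's criterion translates directly into a set comparison. In the sketch below (the function name is hypothetical) we add one requirement that we assume is intended but that is not stated explicitly above: no row index and no column index may occur twice, so that each variable is entered and left exactly once. Without it, a set such as $\{a_{12}, a_{13}, a_{21}, a_{31}\}$, two circuits sharing $x_1$, would also pass.

```python
def is_circuit_or_union(terms):
    """Check a set of non-zero Jacobian terms given as 1-based (i, j) index pairs.

    Cahen's criterion: the set of row indices equals the set of column indices.
    Added assumption of this sketch: no row and no column index may repeat.
    """
    rows = [i for i, _ in terms]
    cols = [j for _, j in terms]
    no_repeats = len(set(rows)) == len(rows) and len(set(cols)) == len(cols)
    return no_repeats and set(rows) == set(cols)


examples = {
    "a13 a21 a32 (three-element circuit)": [(1, 3), (2, 1), (3, 2)],
    "a12 a21 (two-element circuit)": [(1, 2), (2, 1)],
    "a11 (one-element circuit)": [(1, 1)],
    "a12 a21 a33 (union of two disjoint circuits)": [(1, 2), (2, 1), (3, 3)],
    "a12 a23 (open path, not a circuit)": [(1, 2), (2, 3)],
}
for name, terms in examples.items():
    print(name, is_circuit_or_union(terms))
```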

Those circuits and unions of disjoint circuits that involve all the variables of the system play a special role in the relation between circuits and steady states. For this reason, we show them here (Fig. 2) for three-variable systems. For the sake of brevity, we will call them ‘full circuits’, irrespective of whether they are circuits or unions of disjoint circuits. In fact, there is a very simple algorithm for extracting all the full circuits from the Jacobian matrix: it simply consists of computing the determinant of the Jacobian matrix. This determinant is a sum of products, each of which corresponds to one of the ‘full circuits’ of the system. For example, for a two-variable system the determinant of the Jacobian matrix is $a_{11}a_{22} - a_{12}a_{21}$, and the two full circuits are the two-element circuit, symbolised by the product $a_{12}a_{21}$, and the union of two one-element circuits, symbolised by the product $a_{11}a_{22}$.

Fig. 2. The ‘full circuits’ (circuits and unions of disjoint circuits which involve all the variables) in three-variable systems.
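The determinant-based extraction of full circuits can be sketched with the Leibniz expansion, in which each permutation $\sigma$ contributes the signed product $\prod_i a_{i\sigma(i)}$, and the cycles of $\sigma$ are exactly the disjoint circuits making up the corresponding full circuit. The helper names below are illustrative, not from the text; the non-vanishing terms are the full circuits, and their sum reproduces `numpy.linalg.det`.

```python
from itertools import permutations

import numpy as np


def cycles_of(perm):
    """Cycle decomposition of a permutation given as a tuple i -> perm[i] (0-based)."""
    seen, cycles = set(), []
    for start in range(len(perm)):
        if start in seen:
            continue
        cycle, i = [], start
        while i not in seen:
            seen.add(i)
            cycle.append(i)
            i = perm[i]
        cycles.append(tuple(cycle))
    return cycles


def full_circuit_terms(J):
    """Non-vanishing terms of the Leibniz expansion of det J.

    Each term is (cycles, signed product): the cycles are the sets of variables
    of the disjoint circuits that together form one full circuit.
    """
    J = np.asarray(J, dtype=float)
    n = J.shape[0]
    terms = []
    for perm in permutations(range(n)):
        product = np.prod([J[i, perm[i]] for i in range(n)])
        if product != 0.0:
            cycles = cycles_of(perm)
            parity = (-1) ** (n - len(cycles))  # sign of the permutation
            terms.append((cycles, float(parity * product)))
    return terms


# Two variables: the union a11*a22 and the two-element circuit a12*a21
for cycles, value in full_circuit_terms([[1.0, 2.0], [3.0, 4.0]]):
    print([tuple(k + 1 for k in c) for c in cycles], value)

# No full circuit (only a12 and a23 non-zero): no term survives, so det J = 0
print(full_circuit_terms([[0, 1, 0], [0, 0, 1], [0, 0, 0]]))   # []
```

The last line illustrates the remark below: without any full circuit, every product in the determinant contains a zero term.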

Note that in a system that has no full circuits, the determinant of the Jacobian matrix is zero everywhere in phase space. In this case (the matrix is singular and at least one eigenvalue is zero), the system of steady-state equations is underdetermined. This has already been shown by others [10,12], using another terminology.

3 A tight relation between feedback circuits and steady states

All the circuits of a system (and not only the ‘full circuits’) are present in the characteristic equation of the Jacobian matrix. Conversely, by definition of the characteristic equation of a matrix, it is easy to see that only those terms of the Jacobian matrix that belong to a circuit are found in its characteristic equation. The terms that do not take part in a circuit belong to products that vanish because they contain one or more zero terms. Thus, the coefficients of the characteristic equation depend explicitly only on the circuits of the system.
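The statement that off-circuit terms do not enter the characteristic equation can be checked numerically. In the hypothetical Jacobian below (values chosen by us), the circuits are $a_{11}$, $a_{22}$, $a_{33}$ and $a_{12}a_{21}$, while $a_{32}$ ($x_2 \to x_3$) lies on no circuit, because nothing leads back from $x_3$ to $x_1$ or $x_2$. `numpy.poly` applied to a square matrix returns the coefficients of its characteristic polynomial.

```python
import numpy as np


def jac(a32):
    """Hypothetical 3x3 Jacobian. Circuits: a11, a22, a33 and a12*a21.
    a32 (x2 -> x3) is an off-circuit term."""
    return np.array([[-1.0, 2.0, 0.0],
                     [-3.0, -0.5, 0.0],
                     [0.0, a32, -2.0]])


for a32 in (0.0, 1.0, 10.0):
    # coefficients of det(lambda*I - J), highest power first
    print(a32, np.round(np.poly(jac(a32)), 10))
```

Whatever the value of $a_{32}$, the sketch returns the same polynomial, $(\lambda^2 + 1.5\lambda + 6.5)(\lambda + 2) = \lambda^3 + 3.5\lambda^2 + 9.5\lambda + 13$, which is built only from the circuits listed above.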

The off-circuit terms of the Jacobian matrix provide a one-directional connection between otherwise disjoint circuits; as a result, they may influence the steady-state values of the variables. Although they are not present in the Jacobian matrix, constant terms in the ODEs also influence the location of the steady states. Thus, both off-circuit terms of the Jacobian matrix and constant terms in the ODEs may play a role in the system's dynamics. However, their role is only indirect via their effect on the location of the steady states.

How the coefficients of the characteristic equation determine the stability properties of steady states has been studied for many years. Our main contribution is the recognition that there is a tight relation between the ‘logical structure’ (or circuitry) of a system and the number and stability of its steady states.

As a matter of fact [14], a system that reduces to a single full circuit has a steady state that can be qualified as ‘characteristic of the circuit’, in the sense that its nature can be predicted from the structure of the circuit. For example, in three variables, it is easy to show analytically that an isolated three-element circuit will generate a saddle-focus, whatever the detailed nature of its components and the parameter values: the characteristic equation reduces to $\lambda^3 = a_{13}a_{21}a_{32}$. This saddle-focus will be of type $(+, -\pm i)$ or $(-, +\pm i)$, depending only on whether the circuit is positive or negative (in our notation, $(+, -\pm i)$ means that there is one real, positive eigenvalue and a pair of complex conjugate eigenvalues with negative real parts, etc.).
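The claim can be probed numerically by drawing random magnitudes and signs for the three terms of an isolated three-element circuit. The labelling function `eig_type` is our own helper; it assumes the matrix has exactly one real eigenvalue and one complex-conjugate pair, which is always the case here because the eigenvalues are the three cube roots of $a_{13}a_{21}a_{32}$.

```python
import numpy as np


def eig_type(J):
    """Label a 3x3 saddle-focus as '(s1, s2±i)': s1 is the sign of the real
    eigenvalue, s2 the sign of the real part of the complex pair.
    Assumes exactly one real eigenvalue and one complex-conjugate pair."""
    ev = np.linalg.eigvals(J)
    real_ev = ev[np.argmin(np.abs(ev.imag))].real
    pair_re = ev[np.argmax(np.abs(ev.imag))].real
    sign = lambda v: "+" if v > 0 else "-"
    return f"({sign(real_ev)}, {sign(pair_re)}±i)"


def isolated_3_circuit(a13, a21, a32):
    """Jacobian whose only non-zero terms form the circuit x1 -> x2 -> x3 -> x1."""
    J = np.zeros((3, 3))
    J[0, 2], J[1, 0], J[2, 1] = a13, a21, a32
    return J


rng = np.random.default_rng(1)
for _ in range(5):
    # random magnitudes and random signs for the three interactions
    a13, a21, a32 = rng.uniform(0.1, 5.0, 3) * rng.choice([-1.0, 1.0], 3)
    circuit = "+" if a13 * a21 * a32 > 0 else "-"
    print(circuit, eig_type(isolated_3_circuit(a13, a21, a32)))
```

Every positive circuit should print `(+, -±i)` and every negative one `(-, +±i)`, whatever the magnitudes drawn.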

Note that when a system is non-linear, a circuit can be positive or negative depending on the location in phase space. If there is more than one steady state, the nature of each steady state will depend on the structure of the circuit at that location. For example, the very simple system $\{dx/dt = y,\ dy/dt = z,\ dz/dt = 1 - x^2\}$ has a single three-element circuit, $a_{12}a_{23}a_{31} = -2x$, which is positive for $x < 0$ and negative for $x > 0$. There are two steady states: $(-1, 0, 0)$, a saddle-focus of type $(+, -\pm i)$ characteristic of the positive modality of the 3-circuit, and $(1, 0, 0)$, a saddle-focus of type $(-, +\pm i)$ characteristic of its negative modality.
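The two steady states of this example can be classified directly from the eigenvalues of the Jacobian. The sketch reuses the same hypothetical labelling convention, `(sign of the real eigenvalue, sign of the real part of the complex pair ±i)`.

```python
import numpy as np


def jac(x, y, z):
    """Jacobian of dx/dt = y, dy/dt = z, dz/dt = 1 - x**2 (it depends on x only)."""
    return np.array([[0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0],
                     [-2.0 * x, 0.0, 0.0]])


def eig_type(J):
    """'(s1, s2±i)': sign of the real eigenvalue, sign of Re of the complex pair."""
    ev = np.linalg.eigvals(J)
    real_ev = ev[np.argmin(np.abs(ev.imag))].real
    pair_re = ev[np.argmax(np.abs(ev.imag))].real
    sign = lambda v: "+" if v > 0 else "-"
    return f"({sign(real_ev)}, {sign(pair_re)}±i)"


for x_star in (-1.0, 1.0):                   # steady states: y = z = 0, x = ±1
    J = jac(x_star, 0.0, 0.0)
    circuit = J[0, 1] * J[1, 2] * J[2, 0]    # a12 * a23 * a31 = -2x
    print(f"x = {x_star:+.0f}: circuit {'+' if circuit > 0 else '-'}, type {eig_type(J)}")
# x = -1: circuit +, type (+, -±i)
# x = +1: circuit -, type (-, +±i)
```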

‘Nontrivial behaviour’, such as multistationarity, stable periodicity or deterministic chaos, systematically requires both appropriate circuits and an appropriate non-linearity. In particular, multistationarity requires, in addition to an adequate non-linearity, the presence of two full circuits of opposite signs, or else an ambiguity in a simple circuit (full or not). Here, the sign of a union of disjoint circuits is given by $(-1)^{p+1}$, where $p$ is the number of positive circuits in the union [10]; in practice, a union is positive if it contains an odd number of positive circuits. This statement is slightly at variance with that given in [14], in which we wrote: “Multistationarity requires the presence of two full circuits of opposite signs, or of an ambiguous full circuit.” The reason for the modification is that in the meantime we have identified a system, $dx/dt = xy - 1$, $dy/dt = x^2 - 1$, whose unique full circuit is positive everywhere. (It is a peculiar type of circuit, also present in the Lorenz system: since $a_{12} = x$ and $a_{21} = 2x$, it consists of two positive interactions for positive values of $x$ and of two negative interactions for negative values of $x$.) Yet this system has two steady states, $(1, 1)$ and $(-1, -1)$. At first sight, it is not obvious whether this multistationarity must be ascribed to the presence of an ambiguous circuit (the diagonal term $a_{11} = y$) or to the peculiar character of the full circuit. That the first reason is presumably correct is suggested by the fact that the related system $dx/dt = xy - 1$, $dy/dt = x^2 - 1$ (in which the circuit in $a_{11}$ is not ambiguous) has a single steady state.
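The structure of the example $dx/dt = xy - 1$, $dy/dt = x^2 - 1$ can be inspected at its two steady states. This sketch only evaluates the Jacobian terms; the helper names are our own. It shows that the full circuit $a_{12}a_{21} = 2x^2$ is positive at both steady states, that $a_{11}a_{22}$ vanishes identically because $a_{22} = 0$, and that the diagonal term $a_{11} = y$ changes sign between them.

```python
import numpy as np


def f(x, y):
    """Right-hand side of dx/dt = x*y - 1, dy/dt = x**2 - 1."""
    return np.array([x * y - 1.0, x**2 - 1.0])


def jac(x, y):
    """a11 = y, a12 = x, a21 = 2x, a22 = 0."""
    return np.array([[y, x],
                     [2.0 * x, 0.0]])


for x, y in [(1.0, 1.0), (-1.0, -1.0)]:     # x**2 = 1 and x*y = 1
    J = jac(x, y)
    print((x, y), "residual", np.abs(f(x, y)).max(),
          "a11 =", J[0, 0], "a12*a21 =", J[0, 1] * J[1, 0])
```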

As for the non-linearity, it has to be located on a full circuit, but not necessarily on the positive circuit responsible for this nontrivial behaviour.

4 Emergence of complex behaviour by the mere decrease of the dissipative parameter

The considerations above have been used to revisit the well-known Rössler equations [15–17]. In short, we have deciphered the logical structure of the Rössler system and built systems of similar structure, using a wide variety of different nonlinearities. It has been found that, provided an appropriate structure is present, chaotic dynamics is extremely robust toward changes in the precise nature of the nonlinearities used [18,19].

From the analysis of a number of chaotic systems, it was surmised [19,20] that a minimal logical requirement for the chaotic systems we have studied is the presence of negative circuit(s) to generate stable periodicity and of a positive circuit to ensure full or partial multistationarity. (‘Partial multistationarity’ in, say, subspace $yz$ refers to a situation in which there is a range of values of $x$ such that the steady-state equations for $y$ and $z$ have more than one real solution.) For example, the system $\{dx/dt = -y,\ dy/dt = x + ay - z,\ dz/dt = y^3 - cz\}$ has a single ‘full’ steady state ($x = y = z = 0$) for any values of $a$ and $c \neq 0$. It can, however, be shown that for $ac > 0$ there are three steady-state solutions in subspace $yz$: eliminating $z = y^3/c$ leaves the cubic $y^3 - acy - cx = 0$, which has three real roots for $x$ close enough to 0.
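Partial multistationarity in this example reduces to counting the real roots of a cubic. The sketch below (the function name and tolerance are our own) holds $x$ fixed, eliminates $z = y^3/c$, and counts the real roots of $y^3 - acy - cx = 0$ with `numpy.roots`. From the discriminant of the cubic, three real roots exist when $4a^3c > 27x^2$; with $a = c = 1$ this means $|x| < 2/(3\sqrt{3}) \approx 0.385$.

```python
import numpy as np


def yz_steady_states(x, a, c, tol=1e-9):
    """Real steady states (y, z) in subspace yz of
    dx/dt = -y, dy/dt = x + a*y - z, dz/dt = y**3 - c*z, with x held fixed (c != 0).
    dz/dt = 0 gives z = y**3 / c; then dy/dt = 0 gives y**3 - a*c*y - c*x = 0."""
    roots = np.roots([1.0, 0.0, -a * c, -c * x])
    ys = sorted(r.real for r in roots if abs(r.imag) < tol)
    return [(y, y**3 / c) for y in ys]


for x in (0.0, 0.2, 1.0):
    print(x, len(yz_steady_states(x, a=1.0, c=1.0)))   # 3, 3, then 1
```

With $a < 0$ (and $c > 0$) the cubic is monotonic and only one solution remains for every $x$.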

However, as briefly described in Section 5, a single circuit can also generate chaotic dynamics, provided this circuit is positive or negative depending on its location in phase space.

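Let us first consider a system that still comprises diagonal terms in addition to a three-element circuit (note that one-element circuits cannot generate any periodicity by themselves):

$$\frac{dx}{dt} = -bx + y - y^3, \qquad \frac{dy}{dt} = -by + z - z^3, \qquad \frac{dz}{dt} = -bz + x - x^3 \qquad (1)$$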

This system is related to, but even slightly simpler than, the one described in Thomas [20]. Its Jacobian matrix is:

$$J = \begin{pmatrix} -b & 1-3y^2 & 0 \\ 0 & -b & 1-3z^2 \\ 1-3x^2 & 0 & -b \end{pmatrix}$$

Each of the three elements of type $(1 - 3u^2)$ is positive for $|u| < 1/\sqrt{3}$ and negative for $|u| > 1/\sqrt{3}$. Thus, the three-element circuit itself is positive or negative according to a three-dimensional quincunx structure comprising 27 ($3^3$) domains. For $b > 1$, there is a single stable steady state, of type $(-, -\pm i)$. As $b$ decreases, the number of steady states increases up to 27, one per box, all unstable. In agreement with the previous comments about the relationship between feedback circuits and steady states, these 27 steady states are saddle-foci of two types, $(+, -\pm i)$ or $(-, +\pm i)$, depending on whether the circuit is positive or negative in the box considered. Trajectories percolate between these many steady states. As illustrated in Fig. 3, for values of parameter $b$ lower than about 0.29, the dynamics is chaotic (one or two coexisting chaotic attractors), with windows of complex periodic behaviour (one to six stable, more or less complex limit cycles).

Fig. 3. Chaotic and multiperiodic attractors in system (1). (a) Coexistence of six limit cycles (b = 0.30). (b) Coexistence of two complex limit cycles (b = 0.29). (c) Coexistence of two chaotic attractors (b = 0.28). (d) A single chaotic attractor (b = 0.27). (e) Coexistence of three limit cycles (b = 0.24). (f) A single chaotic attractor (b = 0.235). For the intermediate values b = 0.26 and b = 0.25, the system exhibits, respectively, a single, highly complex limit cycle and a single chaotic attractor (not shown).
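The correspondence between circuit sign and steady-state type can be checked for system (1) in the limit $b = 0$, where the steady states are exactly the 27 points with coordinates in $\{-1, 0, 1\}$. This sketch assumes the form $dx/dt = -bx + y - y^3$ with cyclic permutations, inferred from the Jacobian elements $1 - 3u^2$ and the dissipative parameter $b$; the helper names are our own, and the sketch does not follow the steady states for $b > 0$.

```python
from itertools import product

import numpy as np


def jacobian(x, y, z, b):
    """Jacobian of the assumed form of system (1):
    dx/dt = -b*x + y - y**3, dy/dt = -b*y + z - z**3, dz/dt = -b*z + x - x**3."""
    return np.array([[-b, 1 - 3 * y**2, 0.0],
                     [0.0, -b, 1 - 3 * z**2],
                     [1 - 3 * x**2, 0.0, -b]])


def eig_type(J):
    """'(s1, s2±i)': sign of the real eigenvalue, sign of Re of the complex pair."""
    ev = np.linalg.eigvals(J)
    real_ev = ev[np.argmin(np.abs(ev.imag))].real
    pair_re = ev[np.argmax(np.abs(ev.imag))].real
    sign = lambda v: "+" if v > 0 else "-"
    return f"({sign(real_ev)}, {sign(pair_re)}±i)"


# b = 0: one steady state per box, coordinates in {-1, 0, 1}
counts = {}
for state in product((-1.0, 0.0, 1.0), repeat=3):
    J = jacobian(*state, b=0.0)
    circuit = "+" if J[0, 1] * J[1, 2] * J[2, 0] > 0 else "-"
    key = (circuit, eig_type(J))
    counts[key] = counts.get(key, 0) + 1
print(counts)   # 13 positive-circuit states of one type, 14 negative of the other

# The origin, the stable steady state for b > 1
print(eig_type(jacobian(0.0, 0.0, 0.0, b=1.5)))   # (-, -±i)
```

Only two (circuit sign, type) pairs occur, each positive-circuit box giving a $(+, -\pm i)$ saddle-focus and each negative-circuit box a $(-, +\pm i)$ one.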

5 Labyrinth chaos: a single circuit can generate a chaotic dynamics provided the nonlinearities are such that the circuit can be positive or negative

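The function $u - u^3$ used in Section 4 can be viewed as a caricature of $\sin u$, as the first two terms of the Taylor expansion of $\sin u$ are $u - u^3/3!$. In agreement with the observation that nonlinearities which are only roughly and locally similar in shape can generate similar trajectories, the behaviour of the system

$$\frac{dx}{dt} = -bx + \sin y, \qquad \frac{dy}{dt} = -by + \sin z, \qquad \frac{dz}{dt} = -bz + \sin x \qquad (2)$$

is similar to that of system (1) (Fig. 4a, b).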

Fig. 4. Chaotic attractors and labyrinth chaos in system (2). (a, b) Chaotic attractors for b = 0.18 and b = 0.01, respectively. (c) Labyrinth chaos for b = 0.

Strikingly, the mere decrease of the single parameter $b$ results in a steady increase in the size and complexity of the attractor(s). In the limit case $b = 0$, phase space is entirely filled with an infinite three-dimensional lattice of unstable steady states, among which trajectories draw a chaotic (but not random) walk (Fig. 4c). In this conservative system, the chaotic trajectories can cover the whole phase space: there is thus no attractor in the usual sense of the word. This system is described in more detail in [20].
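Trajectories of system (2) can be generated with a few lines of NumPy. This is a sketch under stated assumptions: system (2) is taken in the cyclic form $du_i/dt = -b\,u_i + \sin u_{i+1}$, written for any number of variables so that the 5- or 7-dimensional generalisations can be tried (the exact higher-dimensional form used by the authors is an assumption here); integration uses a fixed-step fourth-order Runge–Kutta scheme of our choosing. The code does not compute Lyapunov exponents, so it cannot by itself demonstrate chaos or hyperchaos. Two properties are easy to check, though: for $b > 0$, no coordinate can leave the interval $[-1/b, 1/b]$ once inside it, and at $b = 0$ every coordinate moves at speed at most 1.

```python
import numpy as np


def rhs(u, b):
    """du_i/dt = -b*u_i + sin(u_{i+1}), indices taken cyclically (u_n = u_0)."""
    return -b * u + np.sin(np.roll(u, -1))


def integrate(u0, b, dt=0.01, steps=5000):
    """Fixed-step fourth-order Runge-Kutta; returns the (steps + 1, n) trajectory."""
    u = np.array(u0, dtype=float)
    traj = np.empty((steps + 1, u.size))
    traj[0] = u
    for k in range(steps):
        k1 = rhs(u, b)
        k2 = rhs(u + 0.5 * dt * k1, b)
        k3 = rhs(u + 0.5 * dt * k2, b)
        k4 = rhs(u + dt * k3, b)
        u = u + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        traj[k + 1] = u
    return traj


dissipative = integrate([0.1, 0.0, 0.0], b=0.18)   # the value used in Fig. 4a
conservative = integrate([0.1, 0.0, 0.0], b=0.0)   # labyrinth-chaos regime
print(np.abs(dissipative).max(), np.abs(conservative).max())
```

For a five-dimensional run, pass a five-component initial condition, e.g. `integrate(np.linspace(0.1, 0.5, 5), b=0.1)`; plotting `dissipative[:, 0]` against `dissipative[:, 1]` gives a projection of the attractor.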

Notably, this type of system can be generalised from 3 to 5, 7 or more dimensions. In five dimensions, the system yields hyperchaos with two positive Lyapunov exponents (Thomas, Eiswirth, Kruel and Rössler, in preparation), and it can be anticipated that, more generally, in $2n+1$ dimensions it will yield hyperchaos of order $n$.

6 Discussion

In this paper we define feedback circuits in terms of the elements of the Jacobian matrix of a system. We stress that the signs of the circuits may depend on their location in phase space. In this context, our main interest is to investigate to what extent the partition of phase space into domains in which the signs of the circuits are constant can be related to a partition according to the eigenvalues of the Jacobian matrix. Relatively simple relations between these two partitions have been derived for a number of typical cases (Kaufman and Thomas, in preparation). For these cases, we know the nature (real or complex eigenvalues, signs of their real parts) of any steady state that might be present in a given phase-space compartment from the circuit structure of the system alone, independently of the mathematical form of the elements of these circuits.

Let us briefly come back to the conjectures proposed by one of us [4]. Initially, the formal proofs of the conjecture that a positive circuit is a necessary (but not sufficient) condition for multistationarity assumed that the functions describing the interactions involved in the circuits are monotonic. This condition of monotonicity has now been relaxed, and the proof has been extended [8] to the more general statement that “a necessary condition for multistationarity is the presence of a circuit that is positive somewhere in phase space”. As regards the necessity of a negative circuit for stable periodicity, this conjecture should also be formulated in a more general way: “the presence of a negative circuit of length ⩾2 is required for stable periodicity [6,7], and the presence of a negative circuit (of whatever length) is required for stability [5], i.e. the existence of an attractor”.

From the biological viewpoint, the conjectures discussed above imply the following laws for biological processes:

- insofar as differentiation and memory can be considered as the biological modalities of the more general concept of multistationarity [1], any process involving differentiative development or the storing or invocation of memory must have at least one positive circuit in its underlying logic;

- homeostasis lato sensu (i.e., with or without oscillations), an extremely frequent situation in nature, requires the presence of a negative circuit.

As regards the potential biological interest of deterministic chaos, several phenomena, such as the waves observed in electrocardiograms (see, for instance, [21]), seem to provide experimental evidence for the occurrence of deterministic chaos in biology. However, there is certainly a deep qualitative difference between the intrinsic complexity of behaviour that can be generated by simple logical structures in the complete absence of external fluctuations (deterministic chaos stricto sensu) and complexity of behaviour that depends, even if only partly, on variable external input.

On the other hand, the logical structure of many well-documented regulatory systems or subsystems is significantly more complex than that required to obtain chaotic dynamics. It would thus be surprising not to find biological systems capable of generating chaotic dynamics in some range of parameter values.

Even though such behaviour can occur, it can be expected to develop and persist only if it provides some selective advantage. But what might be the selective advantage conferred by chaotic behaviour? We feel that chaos (which is a kind of higher complex order – cf. the ‘Chaos’ which serves as a prologue in Die Schöpfung of Josef Haydn) is an extension of multiple periodicity, in which (i) the trajectories extensively explore some regions of phase space, (ii) the trajectories occasionally have access to ‘odd’ regions, and yet (iii) stability is achieved in the sense that, even after the farthest excursions, the trajectories bring the system back into a limited region of phase space. In this sense, deterministic chaos might offer the selective advantages of an extended homeostasis.

Acknowledgements

We acknowledge financial support by the Belgian Program on Interuniversity Poles of Attraction and by the ‘Actions de recherches concertées’ 98-02 No. 220. This work was supported in part by the European Space Agency under contract number 90042.